Atomic reference counting (with Zig code samples)

Referencing counting is probably the oldest memory management technique in existence. It is widely used, easily to understand and explain and in most cases does the Right Thing.

There are edge cases and nasty scenarios, but for the most part, it works. At least, as long as you are running as a single threaded program. Here is what a reference counting scheme looks like:

However, the moment that we have any form of concurrency, the simplicity goes right out the window. Consider the call to add_ref vs. release. Both of them need a reference to a valid object. However, what happens if we have the following sequence of events?

- We have an object whose reference count is set to 1.

- Thread #1 gets the address of the object.

- Thread #2 now calls to release();

- There are no more references to the object and it is freed.

- Thread #1 now calls to add_ref();

- It is passing the address of the recently released object, meaning that we have undefined behavior.

This is a hard problem, because we need to keep the reference count somewhere, and we also need to release the resource as soon as there are no more references to it.

The more generic term for this issue is the ABA problem.

In literature, there are a lot of attempts to solve this issue:

- Hazzard pointers

- GC

- Epochs

They are complex solution to the problem, but mostly because we have two distinct steps here. First we get a pointer to the ref counted value, then we need to change that, but we need to do that safely with concurrent releases. One way of ensuring that this works is to take a lock around the entire operation (acquire the pointer & add ref) and take the same lock for release. That is a somewhat heavy weight approach.

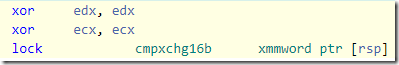

It is also pretty much completely redundant on any modern system. Any x86/x64 CPU released in the past decade will have support this assembly instruction:

The cmpxchg16b instruction allow us to do an atomic operation on a value that is 128 bits long (16 bytes). That is important, because that means that we can break apart the stages above. We can store both the pointer and its counter in a single location, and operate on them atomically.

This is called the DCAS (double compare and swap), which greatly simplify the problem. To the point where there is really no reason to want to use anything else.

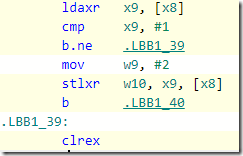

Except… if you care about ARM systems. There is no comparable instruction to this on ARM, and given how common those machines are, that seem to point us right back to the complexities of hazard pointers and epochs. Of course, ARM has 64 bits atomic instructions, as you can see here, for example:

But our pointer is also 64 bits, so that doesn’t really help us that much, does it? Interesting tidbit here, however, the pointers we use aren’t actually 64 bits in size. They are just 48 bits, in truth. That is for both x64 and for AArch64. That means that you can target only a maximum of 256TB of RAM, but there are no machines that big right now (the biggest that I’m aware of are 10% of this size and are expensive). Given that this is a CPU limit, we can probably assume that this isn’t going away soon and that when it does, ARM will likely have a 128 bits atomic instruction.

That means that a 64 bits instruction can give us a 16 bits of free space to work with. But we can do better. Let’s assume that we get our pointers from malloc(), or a similar call. We know that malloc() is required to return the data aligned on max_align_t size. For 64 bits, that is 16. In other words, we have full 20 bits to play with that we can utilize for our needs, while preserving the original pointer value. If we are using page aligned pointers, however, we can use 28(!) bits out of the 64 of the pointer value, for that matter.

Let’s see how we can take that assumption and turn that into something usable. I’m going to use Zig here, because I like the language and it gives us a succinct manner with which to work with native code. The first thing to do is to define how we are going to overall structure:

The size of this structure is 12 bytes, and I’m using Zig’s arbitrary precision integers to help us pack the data into a single u64 value, without having to write all the bit shifts manually. All the other functions that I’m showing here are going to be inside the generic structure. I’m asserting the size and that the T that we are working on is a pointer of some kind. You can see that the structure is also ready to be marked as an error, so we have three possible states:

- Empty – no value is stored inside

- Errored – we’ll just retain the error code

- Value – we have a value and we keep the reference count in the references field. Note that this is a 19 bits field, so we have a maximum of 512K outstanding references for the counter. For pretty much all needs, I believe that this will be sufficient.

In addition to the data itself, we also have the notion of a version field. That one is needed because we want to allow the caller to wait for the value to become available. Let’s see how we can get a value from this?

What I’m doing here is to get the current value, do some basic checks (do we have a value, was an error registered, etc). Then we increment the reference as well as ensure that we don’t overflow it. Finally, we publish the new value using cmpxchng call. Note that the whole thing is in a while loop, to ensure that if the cmpxchng fails, we’ll retry. If we were able to update the reference count, we turn the compacted value into its original form and return that to the user. Because we get the pointer value and increment the reference count as a single step, we are ensured that you cannot end up with missing the release call.

The tryAcquire() call also have a sibling, which will wait for the value to become available, it looks like this:

If the value does not exists (if there is an error, we’ll return immediately), we’ll wait using the Futex.wait() call. This is why we need the version field. It allows us to properly wait without requiring to create kernel level objects. Let’s see how we set the new value, potentially concurrently with threads that want to get to it as well.

We have some convenience functions to make it clear what it is that we are actually setting (an error or a value) and then we get to the meat of this structure, the set() call. There isn’t much here, to be honest. We check that the value isn’t already set, and then set it properly as either an error or a value with a single reference (which is for the caller).

We again use cmpxchng() call to ensure that we are safe in regard to multiple threads (although the usage I have in mind for this calls for a single writer, not competing ones, it doesn’t cost us anything to make it safer to use). After setting the value, we increment the version field and wake any waiting threads.

You can also see how we validate and pack the pointer value to 44 bits.

All of this work, but we are still missing one part. The whole point of reference counting is to delete the value when it it no longer in use, where is that code at? Let’s take a look:

We are taking both the value that we are releasing and the destruction function. The value we want to free is there to ensure that it wasn’t modified meantime, and if there are no more references, we know that we can safely destroy it.

Note that this whole scheme relies on the fact that we are managing the reference count externally to the object itself. In other words, we are assuming that the RefCount value is going to be kept alive. In my case, I”m actually intending to use that as a cache, and we’ll keep an array of those values around for a long time. Otherwise, you run the same risk as before. If you have a reference to the RefCount value, and you may release that, you may end up with a situation where you have a reference to a released memory.

This technique of pointer packing is valid if you use manual memory management mode, you cannot use that in C#, for example, because object may move, and even if you pinned a value, the GC will not consider the value to be a valid pointer, so it will not work for you. For managed languages, there is actually a much better option. Just let go of the value and let the finalizer handle that. In other words, something else (an already existing component) will ensure that there are no live references remaining).

Woah, already finished? 🤯

If you found the article interesting, don’t miss a chance to try our database solution – totally for free!