High performance .NET: Building a Redis Clone–Analysis II

I’m going to take a few steps back and figure out where I should be paying the most attention next. So far in this series, I have mostly focused on how we read and process the data, but I think we ought to see where we are in general. I ran the version with Pipelines and string usage in the profiler to find out. For example, in a previous post, the ConcurrentDictionary that I was using had a big performance cost. Is that still the case now?

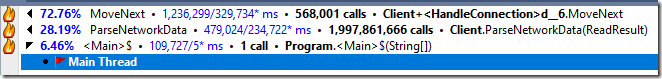

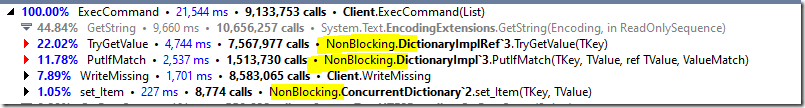

Here are the current hotspots in the codebase:

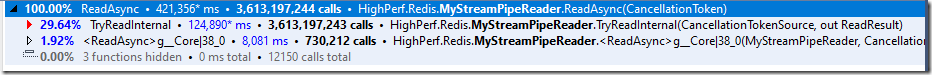

Looking at this in more detail, we have:

That is… interesting. Let’s look at the code for HandleConnection right now:

Looking at the code and the profiler results, I wonder if I can do better here. Here is a small change that gives me a ~2% speed boost:

The idea is that we parallelize reading from and writing to the network. It is a small boost, but any little bit helps, especially once we get into the cascading impacts of repeated optimizations.
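To make the idea concrete, here is a minimal sketch of overlapping the next read with the previous write. It is not the code from this project: it uses plain `Stream` objects rather than a `PipeReader`/`PipeWriter` pair, and `OverlappedIo`, `HandleConnectionAsync` and `Process` are hypothetical names introduced only for illustration. `Process` simply echoes the request and assumes the response fits in the buffer.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class OverlappedIo
{
    // Hypothetical stand-in for command handling: copies the request into
    // the response buffer unchanged and returns the response length.
    private static int Process(ReadOnlySpan<byte> request, Span<byte> response)
    {
        request.CopyTo(response);
        return request.Length;
    }

    public static async Task HandleConnectionAsync(Stream input, Stream output)
    {
        var readBuffer = new byte[4096];
        // Two response buffers: one can be in flight on the wire
        // while the other is being filled with the next response.
        var responseBuffers = new[] { new byte[4096], new byte[4096] };
        int current = 0;
        Task pendingWrite = Task.CompletedTask;

        while (true)
        {
            // This read runs while the previous write may still be in progress.
            int read = await input.ReadAsync(readBuffer.AsMemory());
            if (read == 0)
                break; // the client closed the connection

            int length = Process(readBuffer.AsSpan(0, read), responseBuffers[current]);

            // Only one write is outstanding at a time, so wait for the
            // previous one before starting the next.
            await pendingWrite;
            pendingWrite = output.WriteAsync(responseBuffers[current].AsMemory(0, length)).AsTask();
            current ^= 1; // switch to the buffer that is no longer being written
        }

        await pendingWrite;
        await output.FlushAsync();
    }
}
```

The double buffering is what makes the overlap safe in this sketch: the buffer handed to `WriteAsync` is not touched again until that write has been awaited.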

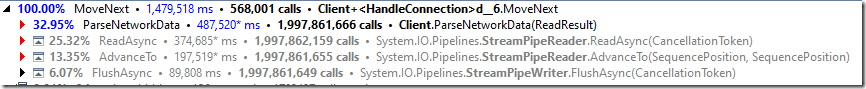

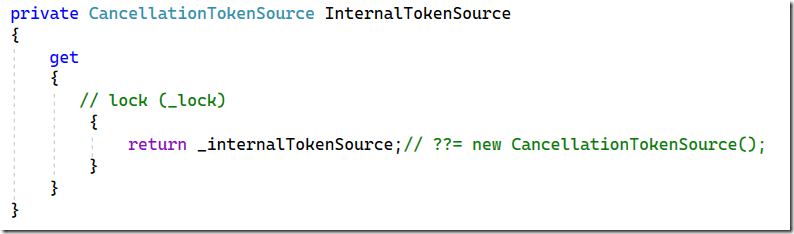

Looking into this, we have almost two billion calls to ReadAsync. Let’s see what is costly there:

That is… wow.

Why is InternalTokenSource so expensive? I’m willing to bet that the issue is this one: it is taking a lock on every access. In my use case, I know that there is a single thread running this, so it is worth seeing if I can skip it. Unfortunately, there isn’t an easy way to skip that check. Fortunately, I can copy the code from the framework and modify it locally, to see what the impact of that would be. So I did just that (initialized once in the constructor):
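As a rough illustration of the pattern being discussed (this is neither the framework's source nor the modified copy), the sketch below contrasts a property that takes a lock on every access to lazily create a `CancellationTokenSource` with one that creates it once in the constructor. `LazyTokenHolder` and `EagerTokenHolder` are hypothetical names made up for this example.

```csharp
using System.Threading;

// Hypothetical: lazily creates the token source, paying for a lock
// on every access, even after the instance already exists.
public sealed class LazyTokenHolder
{
    private readonly object _lock = new object();
    private CancellationTokenSource? _source;

    public CancellationTokenSource Source
    {
        get
        {
            lock (_lock)
            {
                return _source ??= new CancellationTokenSource();
            }
        }
    }
}

// Hypothetical: creates the token source once in the constructor,
// so reading the property is a plain field load with no lock.
public sealed class EagerTokenHolder
{
    private readonly CancellationTokenSource _source;

    public EagerTokenHolder()
    {
        _source = new CancellationTokenSource();
    }

    public CancellationTokenSource Source => _source;
}
```

The eager version trades an allocation per instance (whether or not cancellation is ever used) for a cheaper read path. It also cannot swap in a fresh token source after a cancellation, which is part of why this is an experiment and not a general fix.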

Of course, that is very much a brute force approach, and not one that I would recommend. Looking at the code, it looks like there is a reason for this usage (handling cancellation of operations), but I’m ignoring that for now. Let’s see what the profiler says now:

That means that we have about a 40% improvement in per-call cost. As I mentioned, that is not something that we can just do, but it is an interesting observation on the cost of reading using PipeReader.

Another aspect that is really interesting is the backend state we have, which is a ConcurrentDictionary. If we look at its cost, we have:

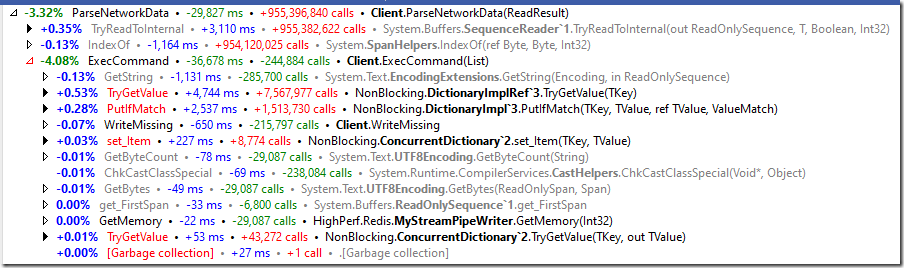

You’ll note that I’m using the NonBlocking NuGet package, which provides a ConcurrentDictionary implementation that isn’t using locking. If we use the default one from the framework, which does use locking, we’ll see:

You can see the cost difference better here:

Note that there is a significant cost difference between the two options (in favor of NonBlocking). But it doesn’t translate to much of a difference when we run a real-world benchmark.
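If you want to reproduce this kind of comparison outside the profiler, a crude timing harness along the following lines is one option. It is only a sketch: `DictionaryBench` and its parameters are hypothetical, the 90/10 read/write mix is an arbitrary choice, and a `Stopwatch` loop is far less rigorous than a proper benchmarking tool. It is written against `IDictionary<string, string>`, which the framework's `ConcurrentDictionary` implements. I am assuming the NonBlocking package's dictionary can be passed in the same way, so check that before relying on it.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Diagnostics;
using System.Threading.Tasks;

public static class DictionaryBench
{
    // Runs a mixed read/write workload from several parallel workers
    // against a shared, thread-safe dictionary and returns the elapsed time.
    public static TimeSpan Run(IDictionary<string, string> store, int workers, int opsPerWorker)
    {
        // Pre-build the keys so string allocation is not part of the measurement.
        var keys = new string[1024];
        for (int i = 0; i < keys.Length; i++)
            keys[i] = "key:" + i;

        var stopwatch = Stopwatch.StartNew();
        Parallel.For(0, workers, worker =>
        {
            for (int i = 0; i < opsPerWorker; i++)
            {
                string key = keys[(i + worker) % keys.Length];
                if (i % 10 == 0)
                    store[key] = "value";            // roughly 10% writes
                else
                    store.TryGetValue(key, out _);   // roughly 90% reads
            }
        });
        stopwatch.Stop();
        return stopwatch.Elapsed;
    }
}
```

Usage would look like `DictionaryBench.Run(new ConcurrentDictionary<string, string>(), 4, 1_000_000)`, repeated for each implementation you want to compare. The caller is responsible for passing a dictionary that is safe for concurrent use.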

So what is next?

Looking at the profiler result, there isn’t really much that we can push forward. Most of the costs we have are in the network, not in the code we run.

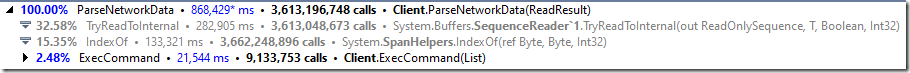

Well, that isn’t quite true, is it? The bulk of the time spent in our own code is in the ParseNetworkData call, which looks like this:

So the total time we spend actually executing the core functionality of our server is really negligible. A lot of time is actually spent parsing the commands from the buffer. Note that here, we don’t actually do any I/O; everything operates on buffers in memory.

The Redis protocol isn’t that friendly for machine parsing, requiring us to do a lot of lookups to find the delimiters (hence the IndexOf() calls). I don’t believe that you can significantly improve on this. This means that we have to consider other options for better performance.
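To show why the delimiter lookups are unavoidable, here is a minimal sketch of parsing one command in the Redis wire format (an array header such as `*3`, followed by length-prefixed bulk strings such as `$3` and `SET`, each line terminated by `\r\n`). This is not the ParseNetworkData code from this project. `RespParser`, `TryParseCommand` and `TryReadLength` are hypothetical names, and the arguments are materialized as strings only to keep the example short.

```csharp
using System;
using System.Buffers.Text;
using System.Collections.Generic;
using System.Text;

public static class RespParser
{
    private static ReadOnlySpan<byte> Crlf => new byte[] { (byte)'\r', (byte)'\n' };

    // Tries to parse a single command from the start of the buffer.
    // Returns false if the buffer does not hold a complete command yet;
    // in that case the contents of 'args' should be ignored.
    public static bool TryParseCommand(ReadOnlySpan<byte> buffer, List<string> args, out int consumed)
    {
        consumed = 0;
        args.Clear();
        int pos = 0;

        if (!TryReadLength(buffer, ref pos, (byte)'*', out int argCount))
            return false;

        for (int i = 0; i < argCount; i++)
        {
            if (!TryReadLength(buffer, ref pos, (byte)'$', out int length))
                return false;
            if (buffer.Length - pos < length + 2)
                return false; // payload plus trailing \r\n not fully received

            args.Add(Encoding.UTF8.GetString(buffer.Slice(pos, length)));
            pos += length + 2; // skip the payload and its \r\n
        }

        consumed = pos;
        return true;
    }

    // Reads a line of the form <prefix><number>\r\n and advances 'pos' past it.
    private static bool TryReadLength(ReadOnlySpan<byte> buffer, ref int pos, byte prefix, out int value)
    {
        value = 0;
        ReadOnlySpan<byte> rest = buffer.Slice(pos);

        // The length of each header is unknown up front, so we must scan for the delimiter.
        int end = rest.IndexOf(Crlf);
        if (end < 0)
            return false; // header line not complete yet
        if (end == 0 || rest[0] != prefix)
            throw new FormatException("Unexpected line prefix in command stream.");

        ReadOnlySpan<byte> digits = rest.Slice(1, end - 1);
        if (!Utf8Parser.TryParse(digits, out value, out int used) || used != digits.Length || value < 0)
            throw new FormatException("Invalid length in command stream.");

        pos += end + 2;
        return true;
    }
}
```

Even in this small sketch, every argument costs one delimiter scan plus one integer parse before the payload can be sliced out, which is the kind of work that shows up in the profile above.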

We are spending 35% of our runtime in parsing the command streams from the client, and the code we execute is less than 1% of our runtime. I don’t think that there are significant optimization opportunities remaining for the stream parsing, so that leaves us with the I/O that we have left. Can we do better?

We are currently using async I/O and pipelines. Looking at the project that got me interested in this topic, it is using io_uring (via this API) on Linux for their needs. Their parsing is straightforward as well (see here), and quite similar to the manner in which my code operates.

So to get to the next stage in performance (as a reminder, we are now at 1.8 million req/sec) we’ll probably need to go to the ring-based approach as well. There is a NuGet package to support it, but that moves this task from something that I can spend a few hours on in an evening to a couple of days or a full week of effort. I don’t think that I’ll pursue this in the near future.
