Implementing a file pager in Zig: Rethinking my approach

After writing the post about handling chunk metadata, I started thinking about the overall approach. Both the method using compressed pointers and the baseline computation felt… off to me. They were certainly workable, but they were too complex and felt fragile.

I don’t like dealing with a high level of complexity; I would rather put a lot of effort into simplifying the solution. The overall approach may be complex, but the system should be nice to work with. Usually, we can get away with a great deal of simplification if we accept some constraints on what we want to do with the system. For now, I’m going to assume the following constraints:

- We are running on a 64-bit OS (and can assume effectively unlimited address space).

- We want to go with a file pager (instead of the memory mapped one) because I want to be able to control the I/O behavior better.

- The files we use are limited to 8 GB in size (we can use more than a single file, of course).

The last one deserves some additional words. When thinking about a storage solution, accepting a maximum size is generally a bad idea (640KB, anyone?). However, if we decide that our storage solution is going to be composed of files of a specific size, we can combine them to reach any size needed.

But why accept this limitation? Why say that a single file will not exceed 8 GB? It turns out that this has several advantages.

Let’s assume that we have a dataset that is 100 GB in size. Using 8 GB files, that would be 13 files, for a total of 104 GB of used disk space. Now we want to delete some of that data. What do we do with the actual used disk space? It is actually quite hard to release disk space back to the operating system if you have a single file. You might need to run compaction of the data, or use an advanced API such as hole punching (see FALLOC_FL_PUNCH_HOLE). Advanced APIs are something that I would like to avoid; it is too easy to fall into some pitfall that no one else has run into. Working with sparse files (with holes in them) also typically requires you to use dedicated tools and can be awkward. If we split the data into separate files, we can retain most of the same benefits and give the user a simpler environment to work with.
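To make the arithmetic concrete, here is a small sketch in Zig. The helpers `filesNeeded` and `locate` are hypothetical names that I am using for illustration; they are not part of the pager itself.

```zig
const std = @import("std");

const GB: u64 = 1024 * 1024 * 1024;
const max_file_size: u64 = 8 * GB; // the per-file limit assumed above

/// Number of files needed to hold `dataset_size` bytes, rounding up.
fn filesNeeded(dataset_size: u64) u64 {
    return (dataset_size + max_file_size - 1) / max_file_size;
}

/// Hypothetical helper: which file, and which offset inside it, holds a logical offset.
const FileLocation = struct { file_index: u64, offset_in_file: u64 };

fn locate(logical_offset: u64) FileLocation {
    return .{
        .file_index = logical_offset / max_file_size,
        .offset_in_file = logical_offset % max_file_size,
    };
}

test "a 100 GB dataset needs 13 files of 8 GB" {
    try std.testing.expectEqual(@as(u64, 13), filesNeeded(100 * GB));
    try std.testing.expectEqual(@as(u64, 104 * GB), filesNeeded(100 * GB) * max_file_size);
}
```

Deleting data then becomes a matter of dropping (or rewriting) whole files, rather than punching holes in a single huge one.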

With the 8 GB limitation in place, I can choose to manage the paging in the following manner:

The idea is pretty simple. Instead of trying to stitch together the memory for the file, we are going to just allocate a single 8 GB range of virtual memory. This can be done with a single anonymous mmap call that asks for PROT_NONE.

This reserves (but does not use) 8 GB of address space. We can now safely allocate ranges from it. This is important because if we have a request for two sequential chunks, they will reside in memory right next to one another. Note that we also don’t need to handle any pointers, since we can rely on a stable base address for the whole file. The nice thing about this is that we aren’t actually allocating memory, just reserving it.
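A minimal sketch of such a reservation, assuming Zig 0.12 or later, where the call is `std.posix.mmap` and the flags are a struct (older versions expose it as `std.os.mmap` with integer flags). The `chunkSlice` helper is a hypothetical name of mine, there only to show that chunk addresses are plain offsets from the stable base.

```zig
const std = @import("std");
const posix = std.posix;

const file_size: usize = 8 * 1024 * 1024 * 1024; // 8 GB of address space per file
const chunk_size: usize = 2 * 1024 * 1024; // 2 MB chunks

/// Hypothetical helper: the part of the reserved range that belongs to chunk `index`.
/// This is plain address arithmetic, no memory is touched.
fn chunkSlice(base: []u8, index: usize) []u8 {
    return base[index * chunk_size ..][0..chunk_size];
}

test "sequential chunks are adjacent inside the reservation" {
    // PROT_NONE + anonymous private mapping: address space only, nothing is committed.
    const range = try posix.mmap(
        null,
        file_size,
        posix.PROT.NONE,
        .{ .TYPE = .PRIVATE, .ANONYMOUS = true },
        -1,
        0,
    );
    defer posix.munmap(range);

    const first = chunkSlice(range, 10);
    const second = chunkSlice(range, 11);
    try std.testing.expectEqual(@intFromPtr(first.ptr) + chunk_size, @intFromPtr(second.ptr));
}
```

Touching any byte of the range at this point would fault, which is exactly what we want until a chunk is explicitly loaded.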

Let’s see how that will work. The chunks array is used to control references to the chunks in the file. The chunk metadata is a 64-bit value that has several responsibilities at the same time. It stores the tag of a chunk, which indicates its status (loaded, error, empty, etc.), and the number of outstanding references to the chunk. That uses up 34 bits in the value; the rest of the bits are used as a version field, which is incremented on each change. That allows us to avoid the ABA problem. The actual data, of course, is managed using the ptr value.
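One way to picture that 64-bit value is as a packed struct. The split below (a 2-bit tag plus a 32-bit reference count, which gives the 34 bits mentioned above, and 30 bits of version) is my assumption, as are the tag names `Error` and `Value` and the helper `withTag`. It is a sketch, not the actual definition.

```zig
const std = @import("std");

const Tag = enum(u2) { Empty, Error, Loading, Value };

/// Hypothetical layout: 2 + 32 = 34 bits of state, 30 bits of version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,

    /// Copy of `self` with a new tag and a bumped version. The version bump is
    /// what makes a compare-and-swap against an older snapshot fail (no ABA).
    fn withTag(self: ChunkMetadata, new_tag: Tag) ChunkMetadata {
        var copy = self;
        copy.tag = new_tag;
        copy.version +%= 1;
        return copy;
    }

    fn toRaw(self: ChunkMetadata) u64 {
        return @bitCast(self);
    }

    fn fromRaw(raw: u64) ChunkMetadata {
        return @bitCast(raw);
    }
};

test "metadata fits in one atomic word" {
    try std.testing.expectEqual(@as(usize, 64), @bitSizeOf(ChunkMetadata));
    const loading = (ChunkMetadata{}).withTag(.Loading);
    const back = loading.withTag(.Empty);
    // Same tag and reference count as the initial state, but a different raw value.
    try std.testing.expect(back.toRaw() != (ChunkMetadata{}).toRaw());
}
```

Because the whole thing is a single `u64`, every state change can be done with one compare-and-swap.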

Getting a chunk from this struct is the job of the tryGet() method.

What tryGet() does is check that the value is loaded into memory and, if it is, increment the reference count and return it. This code runs in a loop, because we assume that multiple threads may run it at the same time. This handles just getting data that is already loaded. If the data isn’t loaded, what will happen? We’ll get a null back. There is also a blocking version of this method, getBlocking().
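As a rough sketch of both methods (not the actual implementation), assuming Zig 0.12 or later for the lowercase atomic orderings. The struct fields, the metadata layout, the tag names and the `ChunkLoadFailed` error are my assumptions. I am also spinning in the blocking version to keep the sketch short, where a real implementation would more likely sleep on something like a futex.

```zig
const std = @import("std");

const chunk_size: usize = 2 * 1024 * 1024;
const Tag = enum(u2) { Empty, Error, Loading, Value };

// Hypothetical layout: 2-bit tag + 32-bit reference count + 30-bit version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,
};

const FileChunks = struct {
    chunks: []u64, // one packed ChunkMetadata word per chunk
    ptr: [*]u8, // stable base address of the reserved range

    /// Returns the chunk's memory if it is loaded (taking a reference), or null otherwise.
    fn tryGet(self: *const FileChunks, index: usize) error{ChunkLoadFailed}!?[]u8 {
        const slot = &self.chunks[index];
        while (true) {
            const raw = @atomicLoad(u64, slot, .acquire);
            const current: ChunkMetadata = @bitCast(raw);
            switch (current.tag) {
                .Empty, .Loading => return null,
                .Error => return error.ChunkLoadFailed,
                .Value => {},
            }
            var updated = current;
            updated.references += 1;
            updated.version +%= 1;
            // Another thread may have raced us; on failure, re-read and retry.
            if (@cmpxchgWeak(u64, slot, raw, @bitCast(updated), .acq_rel, .acquire) == null) {
                return self.ptr[index * chunk_size ..][0..chunk_size];
            }
        }
    }

    /// Like tryGet(), but waits while another thread is loading the chunk.
    /// Still returns null for an Empty chunk, since nobody is loading it.
    fn getBlocking(self: *const FileChunks, index: usize) error{ChunkLoadFailed}!?[]u8 {
        while (true) {
            if (try self.tryGet(index)) |chunk| return chunk;
            const current: ChunkMetadata = @bitCast(@atomicLoad(u64, &self.chunks[index], .acquire));
            if (current.tag == .Empty) return null;
            std.atomic.spinLoopHint(); // busy wait, for brevity only
        }
    }
};
```

Every successful tryGet() has to be paired with a release of the reference later on, otherwise the chunk can never be evicted.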

Just based on those two methods, you should be able to draw some conclusions. If the value isn’t loaded, we’ll always return null. But there is this Loading stage as well, and in that case, we may want to wait for it. How is that going to work?

This works using two important functions: markLoading() and markLoaded(). The idea is that we’ll first call tryGet() to get a chunk; if there is no value, we need to load it from disk. At that point, remember, there may be multiple threads accessing the relevant chunk, so all of them would be competing on the markLoading() function.

The code itself is pretty simple: we update the tag of the chunk optimistically, moving the state of the chunk from Empty to Loading in a thread-safe manner. If we are successful in doing so, we know that we are the only thread that owns the loading portion of the chunk. Note that part of the markLoading() process is to ask the OS to give us the memory for the chunk (in the range that we previously reserved).
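The state transition part of that can be sketched as follows, assuming Zig 0.12 or later and the same hypothetical metadata layout (2-bit tag, 32-bit reference count, 30-bit version). The signature is my own simplification, and the step that asks the OS for the memory is left as a comment.

```zig
const std = @import("std");

const Tag = enum(u2) { Empty, Error, Loading, Value };

// Hypothetical layout: 2-bit tag + 32-bit reference count + 30-bit version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,
};

/// Try to move a chunk from Empty to Loading.
/// Returns true for the one thread that wins the race, false for everyone else.
fn markLoading(slot: *u64) bool {
    while (true) {
        const raw = @atomicLoad(u64, slot, .acquire);
        var meta: ChunkMetadata = @bitCast(raw);
        if (meta.tag != .Empty) return false; // already loading, loaded, or failed
        meta.tag = .Loading;
        meta.version +%= 1;
        // A weak CAS may fail spuriously or because of a race; either way, re-read and retry.
        if (@cmpxchgWeak(u64, slot, raw, @bitCast(meta), .acq_rel, .acquire) == null) {
            // The winner owns the chunk now. This is where it would ask the OS to make the
            // chunk's slice of the reserved range accessible, before reading from disk.
            return true;
        }
    }
}

test "only one caller wins the right to load" {
    var slot: u64 = 0; // Empty, no references, version 0
    try std.testing.expect(markLoading(&slot));
    try std.testing.expect(!markLoading(&slot));
}
```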

At this point, we can load the data from disk somehow, and then we’ll call the markLoaded() function, which completes the process.

The idea is that we are splitting the responsibility for managing the chunk references from how we load the data to memory.
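A minimal sketch of what markLoaded() might look like, under the same assumptions as before (Zig 0.12 or later, hypothetical layout and tag names). I am also assuming that the loader walks away holding one reference of its own; the real code may account for references differently.

```zig
const std = @import("std");

const Tag = enum(u2) { Empty, Error, Loading, Value };

// Hypothetical layout: 2-bit tag + 32-bit reference count + 30-bit version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,
};

/// Publish a chunk whose data has been read from disk: Loading -> Value.
/// Assumption in this sketch: the loader leaves with one reference of its own.
fn markLoaded(slot: *u64) void {
    const raw = @atomicLoad(u64, slot, .acquire);
    var meta: ChunkMetadata = @bitCast(raw);
    // Only the thread that won the race to load the chunk may call this.
    std.debug.assert(meta.tag == .Loading);
    meta.tag = .Value;
    meta.references = 1;
    meta.version +%= 1;
    // In this sketch nobody else modifies the slot while the tag is Loading, so a
    // release store is enough to publish the metadata and the chunk contents written before it.
    @atomicStore(u64, slot, @bitCast(meta), .release);
}
```

Note that nothing here knows how the bytes got into memory. That is the split in responsibility: the metadata only tracks state and references.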

In other words, the expected usage of this struct is something like this:

- Call tryGet() to get a page in a given chunk.

- If successful, do the work you wanted to do.

- If not successful, compete to be the loader for this data by calling markLoading().

- If you lost, call getBlocking() to wait for the winner to get the data.

- If you won, load the data from the disk (somehow) and call markLoaded().

- Proceed to make use of the data.
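The steps above can be sketched as a single function that works on one metadata word. Everything here is an assumption for illustration: the `transition` helper, the `acquireChunk` name, the `loadFromDisk` callback that stands in for the actual I/O, and the spin wait that plays the role of getBlocking(). It targets Zig 0.12 or later.

```zig
const std = @import("std");

const Tag = enum(u2) { Empty, Error, Loading, Value };

// Hypothetical layout: 2-bit tag + 32-bit reference count + 30-bit version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,
};

/// Hypothetical helper: move the slot from tag `from` to tag `to`, adding `add_refs`
/// references. Returns false, changing nothing, if the current tag is not `from`.
fn transition(slot: *u64, from: Tag, to: Tag, add_refs: u32) bool {
    while (true) {
        const raw = @atomicLoad(u64, slot, .acquire);
        var meta: ChunkMetadata = @bitCast(raw);
        if (meta.tag != from) return false;
        meta.tag = to;
        meta.references += add_refs;
        meta.version +%= 1;
        if (@cmpxchgWeak(u64, slot, raw, @bitCast(meta), .acq_rel, .acquire) == null) return true;
    }
}

fn tagOf(slot: *const u64) Tag {
    const meta: ChunkMetadata = @bitCast(@atomicLoad(u64, slot, .acquire));
    return meta.tag;
}

/// On success the caller holds one reference to a loaded chunk.
fn acquireChunk(slot: *u64, loadFromDisk: *const fn () void) error{ChunkLoadFailed}!void {
    while (true) {
        // Fast path (tryGet): the chunk is already loaded, just take a reference.
        if (transition(slot, .Value, .Value, 1)) return;
        // Not loaded: compete to be the loader (markLoading).
        if (transition(slot, .Empty, .Loading, 0)) {
            // We won: load the data, then publish it with our own reference (markLoaded).
            loadFromDisk();
            const published = transition(slot, .Loading, .Value, 1);
            std.debug.assert(published);
            return;
        }
        // We lost: wait for the winner to finish (getBlocking), then go around again.
        while (tagOf(slot) == .Loading) std.atomic.spinLoopHint();
        if (tagOf(slot) == .Error) return error.ChunkLoadFailed;
    }
}
```

The outer loop matters: by the time a waiting thread wakes up, the chunk may have been loaded and reclaimed again, in which case it simply competes for the load once more.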

Another important aspect that we have to deal with is what happens when we want to discard data. Basically, if we have filled our memory budget and we need to load a value from the disk, what can we do then? The answer is that we need to evict some data. Before we can do that, we need to know what data is currently in use. That is why we have the calls to addRef() and release(). We use those (using atomic operations) to track the usage of the various chunks. When we need to evict data from memory, we’ll need to have some sort of a policy to do so. I’m deferring the actual policy to a later point in time; right now I want to discuss how we know what we can evict and how that is going to work.
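Here is a rough sketch of what the reference tracking could look like. The signatures are hypothetical (the real addRef() and release() may well differ), the layout and tag names are the same assumptions as before, and the code targets Zig 0.12 or later.

```zig
const std = @import("std");

const Tag = enum(u2) { Empty, Error, Loading, Value };

// Hypothetical layout: 2-bit tag + 32-bit reference count + 30-bit version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,
};

/// Take one more reference on a loaded chunk. Returns false if the chunk is not loaded.
fn addRef(slot: *u64) bool {
    while (true) {
        const raw = @atomicLoad(u64, slot, .acquire);
        var meta: ChunkMetadata = @bitCast(raw);
        if (meta.tag != .Value) return false;
        meta.references += 1;
        meta.version +%= 1;
        if (@cmpxchgWeak(u64, slot, raw, @bitCast(meta), .acq_rel, .acquire) == null) return true;
    }
}

/// Drop a reference that was taken earlier. Returns how many references remain.
fn release(slot: *u64) u32 {
    while (true) {
        const raw = @atomicLoad(u64, slot, .acquire);
        var meta: ChunkMetadata = @bitCast(raw);
        // A referenced chunk cannot be evicted, so it must still be loaded.
        std.debug.assert(meta.tag == .Value and meta.references > 0);
        meta.references -= 1;
        meta.version +%= 1;
        if (@cmpxchgWeak(u64, slot, raw, @bitCast(meta), .acq_rel, .acquire) == null) {
            return meta.references;
        }
    }
}
```

A chunk whose count is back to where it started is a candidate for eviction; one that is still referenced must be left alone.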

The eviction code currently implements a policy of simple scanning (not ideal by a long shot).

In the reclaim method, we are scanning through the chunks. To be able to reclaim a chunk, the following conditions need to hold:

- The chunk holds a value.

- There are no outstanding references to the chunk; only the pager is holding a reference to it.
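A minimal sketch of such a scan, assuming Zig 0.12 or later and the same hypothetical layout. One assumption to call out loudly: in this sketch the count only tracks references held by callers, so "unused" means a count of zero. If the pager's own reference is counted as well, the check would be against one instead. Discarding the memory itself is left as a comment.

```zig
const std = @import("std");

const Tag = enum(u2) { Empty, Error, Loading, Value };

// Hypothetical layout: 2-bit tag + 32-bit reference count + 30-bit version.
const ChunkMetadata = packed struct(u64) {
    tag: Tag = .Empty,
    references: u32 = 0,
    version: u30 = 0,
};

/// Scan every chunk and reclaim those that hold a value and are not in use.
/// Returns the number of chunks that were reclaimed.
fn reclaim(chunks: []u64) usize {
    var reclaimed: usize = 0;
    for (chunks) |*slot| {
        const raw = @atomicLoad(u64, slot, .acquire);
        var meta: ChunkMetadata = @bitCast(raw);
        if (meta.tag != .Value or meta.references != 0) continue;
        // Use Loading as a busy signal so that nobody grabs the chunk while we discard it.
        meta.tag = .Loading;
        meta.version +%= 1;
        // One attempt only: if another thread touched the chunk in the meantime, skip it.
        if (@cmpxchgStrong(u64, slot, raw, @bitCast(meta), .acq_rel, .acquire) != null) continue;
        // ... the chunk's memory would be discarded here ...
        meta.tag = .Empty;
        meta.version +%= 1;
        @atomicStore(u64, slot, @bitCast(meta), .release);
        reclaimed += 1;
    }
    return reclaimed;
}

test "only unused, loaded chunks are reclaimed" {
    var chunks = [_]u64{
        @as(u64, @bitCast(ChunkMetadata{})), // Empty: nothing to do
        @as(u64, @bitCast(ChunkMetadata{ .tag = .Value })), // loaded and unused
        @as(u64, @bitCast(ChunkMetadata{ .tag = .Value, .references = 2 })), // in use
        @as(u64, @bitCast(ChunkMetadata{ .tag = .Loading })), // busy
    };
    try std.testing.expectEqual(@as(usize, 1), reclaim(&chunks));
}
```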

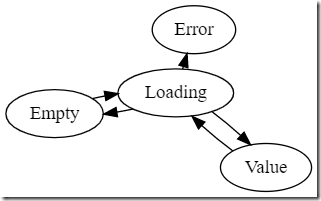

Note that in order to do this safely, we have to assume that while we are trying to reclaim a chunk, another thread is trying to use it. This behavior complicates our lives a bit. We handle that by doing a racy update of the chunk, trying to move it to the Loading state. The idea is that the Loading state is meant to be used as a busy signal. While the chunk is in the Loading state, the rest of the system knows that it cannot use it and needs to wait. Note that this means that we have the following transitions: a chunk goes from Empty to Loading to loaded when it is read from disk, and from loaded to Loading and back to Empty when it is reclaimed.

Note that most of the code that we have in the struct is there to handle concurrency from multiple threads dealing with the system at once. The actual behavior is fairly simple. We check if we can reclaim the chunk (no one is looking), and we take a lock on it by trying to move its state to Loading. Then we can discard the memory by calling mmap on the chunk’s memory with PROT_NONE.
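To show the memory side of this in isolation, here is a small sketch, assuming Zig 0.12 or later (`std.posix`) on a POSIX system. Using mprotect to make the chunk accessible is my choice for the sketch; the real code may ask the OS for the memory in a different way. The discard step is the mmap with PROT_NONE described above, with MAP_FIXED so that it lands on the same addresses.

```zig
const std = @import("std");
const posix = std.posix;

const chunk_size: usize = 2 * 1024 * 1024; // 2 MB chunks

test "commit a chunk, then discard it by mapping PROT_NONE over it" {
    // Stand-in for the 8 GB reservation: two chunks of inaccessible address space.
    const range = try posix.mmap(
        null,
        2 * chunk_size,
        posix.PROT.NONE,
        .{ .TYPE = .PRIVATE, .ANONYMOUS = true },
        -1,
        0,
    );
    defer posix.munmap(range);

    // Loading: make the first chunk usable and put some data in it.
    const chunk = range[0..chunk_size];
    try posix.mprotect(chunk, posix.PROT.READ | posix.PROT.WRITE);
    chunk[0] = 0xAB;

    // Reclaiming: a fixed mapping over the same addresses drops the pages and
    // leaves the range reserved but inaccessible again.
    _ = try posix.mmap(
        range.ptr,
        chunk_size,
        posix.PROT.NONE,
        .{ .TYPE = .PRIVATE, .ANONYMOUS = true, .FIXED = true },
        -1,
        0,
    );

    // If the chunk is loaded again later, it starts from fresh, zeroed pages.
    try posix.mprotect(chunk, posix.PROT.READ | posix.PROT.WRITE);
    try std.testing.expectEqual(@as(u8, 0), chunk[0]);
}
```

The base address never changes through any of this, which is what lets the rest of the code keep using plain offsets.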

For fun, we are using 2 MB chunks because that fits well into huge pages. On a properly set up system, we can significantly reduce the paging metadata overhead inside the kernel by allocating a single 2 MB page for each chunk.
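The numbers behind that, as a small worked example. The 4 KB base page size is an assumption (it is the common default on x86-64); the other two values come from the constraints above.

```zig
const std = @import("std");

const file_size: u64 = 8 * 1024 * 1024 * 1024; // 8 GB per file
const chunk_size: u64 = 2 * 1024 * 1024; // 2 MB per chunk
const base_page_size: u64 = 4 * 1024; // assumed 4 KB base pages

const chunks_per_file = file_size / chunk_size;
const base_pages_per_chunk = chunk_size / base_page_size;

test "chunk arithmetic" {
    // The chunks array needs one 64-bit metadata word per chunk.
    try std.testing.expectEqual(@as(u64, 4096), chunks_per_file);
    // Without huge pages, each chunk is backed by this many separate pages.
    try std.testing.expectEqual(@as(u64, 512), base_pages_per_chunk);
}
```

So the metadata for a whole 8 GB file is 4,096 words (32 KB), and a chunk backed by one huge page replaces 512 separate small page mappings in the kernel.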

You can see the entire implementation here. In the next post, I want to look into handling the I/O portion of reading the data from the disk. After that we’ll talk about how we can implement a proper eviction policy.
