Looking into Corax’s posting lists: Part II

In a previous post (which went out a long time ago) I explained that we have the notion of a set of uint64 values that are used for document IDs. We build a B+Tree with different behaviors for branch pages and leaf pages, allowing us to pack a lot of document IDs (thousands or more) per page.

The problem is that this structure holds the data compressed, so when we add or remove a value, we don’t know whether it already exists. That is a problem because, while we can apply the relevant fixups to skip duplicates and erase removed values, the number of entries recorded for the set ends up inaccurate. That is a Problem, with a capital P, since we use that number for query optimizations.

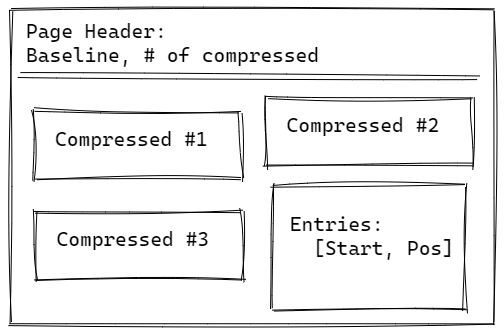

The solution for that is to move to a different manner of storing the data in the leaf page, instead of going with a model where we add the data directly to the page and compress when the raw values section overflows, we’ll use the following format instead:

Basically, I removed the raw values section from the design entirely. That means that whenever we want to add a new value, we need to find the relevant compressed segment inside the page and add to it (potentially creating a page split, etc).

Obviously, that is not going to perform well for writes, since on each addition we’ll need to decompress the segment, add to it, and then compress it again.

The idea here is that we don’t need to do that. Instead of trying to store the entries in the set immediately, we are going to keep them in memory for the duration of the transaction. Just before we commit the transaction, we are going to have two lists of document IDs to go through: one of added documents and one of removed documents. We can then sort those IDs and walk over the lists, finding the relevant page for each run of IDs and merging that run with the page’s compressed values.
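The merge step for a single segment can be sketched as follows. This is a minimal illustration, not Corax’s code: the delta-plus-varint encoding, the function names (`encode_segment`, `decode_segment`, `apply_changes`), and the rule that a removal wins when the same ID is both added and removed are all assumptions made for the example.

```python
def encode_segment(sorted_ids):
    """Store ascending ids as gaps, each gap written as a 7-bit varint."""
    out = bytearray()
    prev = 0
    for value in sorted_ids:
        gap = value - prev
        prev = value
        while gap >= 0x80:
            out.append((gap & 0x7F) | 0x80)  # high bit set: more bytes follow
            gap >>= 7
        out.append(gap)
    return bytes(out)


def decode_segment(data):
    """Inverse of encode_segment: returns the ascending list of ids."""
    ids, value, gap, shift = [], 0, 0, 0
    for byte in data:
        gap |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7
        else:
            value += gap
            ids.append(value)
            gap, shift = 0, 0
    return ids


def apply_changes(segment, additions, removals):
    """Merge one transaction's buffered changes into a compressed segment.

    Returns (new_segment, count_delta). Because the segment is decoded
    during the merge, count_delta is exact: duplicate additions and
    removals of missing ids do not affect it.
    """
    adds = sorted(set(additions))
    removed = set(removals)            # assumption: removal wins over addition
    existing = decode_segment(segment)  # decompress once per batch
    merged = []
    i = j = 0
    while i < len(existing) or j < len(adds):
        if j == len(adds) or (i < len(existing) and existing[i] <= adds[j]):
            value = existing[i]
            i += 1
            if j < len(adds) and adds[j] == value:
                j += 1                 # id already present: skip the duplicate add
        else:
            value = adds[j]
            j += 1
        if value not in removed:
            merged.append(value)
    return encode_segment(merged), len(merged) - len(existing)  # compress once


segment = encode_segment([3, 10, 500])
new_segment, count_delta = apply_changes(segment, [10, 7, 1000], [3, 42])
```

Here `10` is already present and `42` was never there, so neither changes the count. The segment ends up holding `7, 10, 500, 1000`, and `count_delta` is exactly `1`.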

By moving the buffering stage from the per-page model to the per-transaction model, we actually gain quite a lot of performance, since if we have a lot of changes to a single page, we compress its data only once. It is a very strange manner of working, to be honest, because I’m used to doing the operation immediately. By delaying the cost to the end of the transaction, we gain two major benefits. First, we have a big opportunity for batching and optimizing work on large datasets. Second, we have a single code path for this operation. It’s always: “Get a batch of changes and apply them as a unit”. It turns out that this is far easier to understand and work with. And that is just the write side of Corax.
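To make the batching benefit concrete, here is a small sketch of how a sorted batch of IDs might be split into per-page runs. The `page_first_keys` list is a hypothetical stand-in for the B+Tree branch pages (it holds the smallest ID each leaf page is responsible for), and `group_by_page` is an illustrative name, not a Corax API.

```python
from bisect import bisect_right
from itertools import groupby


def group_by_page(page_first_keys, sorted_ids):
    """Yield (page_index, ids) for each leaf page touched by sorted_ids.

    page_first_keys: ascending list with the smallest id owned by each
    leaf page (hypothetical stand-in for the branch-page lookup).
    """
    def page_of(doc_id):
        # The last page whose first key is <= doc_id owns this id.
        return max(bisect_right(page_first_keys, doc_id) - 1, 0)

    # Because the ids are sorted, all changes for a page are adjacent,
    # so each page shows up exactly once.
    for page, ids in groupby(sorted_ids, key=page_of):
        yield page, list(ids)


page_first_keys = [0, 1000, 5000]
changes = sorted([1500, 12, 1001, 7, 9000, 1999])
runs = list(group_by_page(page_first_keys, changes))
```

Six buffered changes collapse into three runs, so each affected page is decompressed and recompressed once rather than once per ID.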

Remember, however, that Corax is a search engine, so we expect a lot of reads. For reads, we can now stream the results directly from the compressed segments. Given that we can usually pack a lot of numbers into a segment, and that we don’t need to compare to the uncompressed portion, that ended up benefiting us significantly on the read side as well, surprisingly.
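As a rough illustration of streaming from a compressed segment, the sketch below assumes a segment stores ascending IDs as varint-encoded gaps. That encoding is chosen for the example only and is not Corax’s actual format; `iter_segment` is likewise a hypothetical name.

```python
from itertools import islice


def iter_segment(data):
    """Lazily yield ascending ids from a segment of varint-encoded gaps."""
    value = gap = shift = 0
    for byte in data:
        gap |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7      # more bytes follow for this gap
        else:
            value += gap    # gap complete: emit the next id
            yield value
            gap = shift = 0


# Gaps 3, 7, 490 encode the ids 3, 10, 500 (490 takes two bytes: 234, 3).
segment = bytes([3, 7, 234, 3])

# A reader that only needs the first two results never decodes the rest.
first_two = list(islice(iter_segment(segment), 2))
```

With no uncompressed section to merge against, the reader is a single forward pass over the bytes, and it can stop as soon as the query has enough results.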

Of course, there is another issue. Notice the Baseline in the Page Header? We’ll discuss that in the next post; it turned out that it wasn’t such a good idea.
