Optimizing local and distributed transactions with batching

I got into a good discussion about how RavenDB implements some optimizations with transaction handling. The details got big enough (and hopefully interesting enough) that they warrant their own post.

When we are talking about transactions, one of the key factors in the cost of a transaction is the amount of time that it takes to persist it. This is different for local and distributed transactions.

For a local transaction, we can consider the transaction committed if it is durably stored on the disk.

For a distributed transaction, we can consider the transaction committed if it is durably stored on a majority of the disks in the cluster.
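To make the two definitions concrete, here is a minimal sketch of the commit rule. The function names are mine, purely for illustration, and are not RavenDB's API. A local transaction is simply the case of a cluster of one.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a majority of the cluster."""
    return cluster_size // 2 + 1


def is_committed(durable_acks: int, cluster_size: int) -> bool:
    """True once a majority of the nodes have the transaction durably on disk.

    durable_acks counts every node that has persisted the transaction,
    including the node that accepted it in the first place.
    """
    return durable_acks >= majority(cluster_size)


# A single node only waits for its own disk.
print(is_committed(durable_acks=1, cluster_size=1))  # True
# A five node cluster needs three disks (and the network hops to reach them).
print(is_committed(durable_acks=2, cluster_size=5))  # False
print(is_committed(durable_acks=3, cluster_size=5))  # True
```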

That factor right there is the primary reason why a distributed transaction is more expensive. For a local transaction, you are waiting for the disk. For a distributed transaction, you are waiting for multiple disks and the network.

One of the core optimizations for speeding up transactions is the ability to batch things. The cost of writing to the disk is more or less the same, regardless of how much you write (within an order of magnitude or so). In other words, writing 8 KB and writing 32 KB have pretty much the same cost. Writing 1 MB and writing 100 MB do not, but writing 1 MB vs. 4 MB isn’t meaningfully different. (I’m talking about sequential durable writes here, which is what we care about in the case of transactions.)

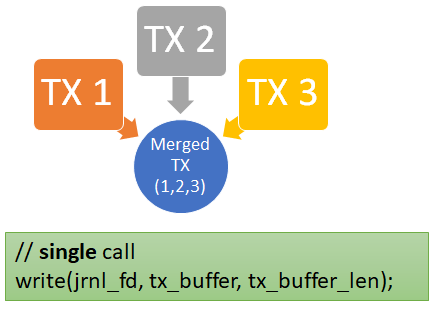

The point of this post is how this is actually handled. RavenDB utilizes a process called transaction merging to reduce the number of times that we have to go to the disk. Concurrent transactions will be bundled into the same write call, massively increasing our throughput. To give you some context, without transaction merging, you can peak at a few hundred transactions per second. With transaction merging, you can jump to high thousands of transactions per second. Here is how this works:

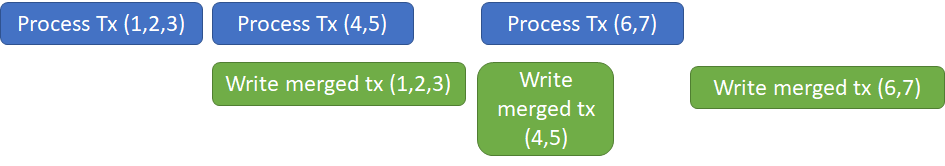
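A bit of back-of-the-envelope arithmetic shows where the jump from hundreds to thousands comes from. The 4 ms write latency and the 32 transactions per write below are numbers I picked for illustration, not measurements. The model also ignores execution time and assumes the write costs the same regardless of how many transactions are in it, which only holds within the size range discussed above.

```python
def transactions_per_second(write_latency_ms: float, transactions_per_write: int) -> float:
    """Throughput when each durable write carries a fixed number of transactions."""
    writes_per_second = 1000.0 / write_latency_ms
    return writes_per_second * transactions_per_write


# One transaction per durable write (no merging).
print(transactions_per_second(4.0, 1))   # 250.0
# Thirty-two concurrent transactions merged into each durable write.
print(transactions_per_second(4.0, 32))  # 8000.0
```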

RavenDB actually takes this further. In addition to transaction merging, we also apply something we call async commit. Consider the timeline of a transaction:

A transaction is actually composed of two separate steps. First we need to execute whatever commands we have in the transaction, then we have to write the transaction changes to disk.

RavenDB is able to start processing the next transaction as soon as the previous one started the write to the disk. The idea is to parallelize compute and I/O, and we are able to benefit greatly as a result. Note that this is safe to do, since the next transaction won’t be committed until the prior transaction has been durably stored.
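Here is a small timing model of that overlap. It is a sketch under simplifying assumptions of my own: every transaction takes the same time to execute and the same time to write, and there is exactly one executor and one disk. The function names are hypothetical.

```python
def serial_time(count: int, execute_ms: float, write_ms: float) -> float:
    """Total time when each transaction executes, then waits for its own write."""
    return count * (execute_ms + write_ms)


def pipelined_time(count: int, execute_ms: float, write_ms: float) -> float:
    """Total time when the next transaction executes while the previous one is written."""
    exec_start = 0.0
    prev_write_end = 0.0
    for _ in range(count):
        exec_end = exec_start + execute_ms
        # The write cannot begin until the previous write is durable,
        # which is what keeps the ordering safe.
        write_start = max(exec_end, prev_write_end)
        prev_write_end = write_start + write_ms
        # The next transaction starts executing as soon as this write has started.
        exec_start = write_start
    return prev_write_end


print(serial_time(3, execute_ms=2, write_ms=3))     # 15
print(pipelined_time(3, execute_ms=2, write_ms=3))  # 11.0
```

In this model, once the pipeline is full, the total time is governed by whichever of the two steps is slower, instead of by their sum.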

How does this work in practice? Whenever we have a new transaction, we add it to a queue. A dedicated thread pulls transactions from the queue and merges them, running the separate transactions as one big operation. When we run out of pending transactions or hit certain size / timing limits, we’ll commit the merged transaction and start working on the next one while the commit is completing in the background.
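The queue and the dedicated thread can be sketched in a few dozen lines. To be clear, this is not RavenDB's code: `TransactionMerger`, `enqueue`, `persist` and `max_batch` are names I made up for the example, only a size limit is modeled, and the commit here is synchronous (the background commit described above is left out to keep the sketch short). Error handling is also simplified; a real engine would roll back the changes of a failed command.

```python
import queue
import threading
from concurrent.futures import Future


class TransactionMerger:
    def __init__(self, state, persist, max_batch=128):
        self._state = state
        self._persist = persist      # hypothetical durable write, called once per merged batch
        self._max_batch = max_batch  # stand-in for the size / timing limits
        self._pending = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def enqueue(self, command):
        """Queue a command; the returned future completes after the durable write."""
        done = Future()
        self._pending.put((command, done))
        return done

    def close(self):
        self._pending.put(None)  # sentinel that stops the merger thread
        self._thread.join()

    def _run(self):
        stopping = False
        while not stopping:
            item = self._pending.get()  # idle system: block until the first transaction
            if item is None:
                break
            batch = [item]
            # Take whatever else is already waiting, without waiting for more to arrive.
            while len(batch) < self._max_batch:
                try:
                    item = self._pending.get_nowait()
                except queue.Empty:
                    break
                if item is None:
                    stopping = True
                    break
                batch.append(item)
            outcomes = []
            for command, done in batch:
                try:
                    outcomes.append((done, command(self._state), None))
                except Exception as error:  # simplified: no rollback of partial changes
                    outcomes.append((done, None, error))
            self._persist(self._state)  # a single durable write for the whole batch
            for done, value, error in outcomes:
                if error is not None:
                    done.set_exception(error)
                else:
                    done.set_result(value)
```

A caller would do something like `merger.enqueue(lambda s: s.update(key="value")).result()`, and only gets control back once the batch containing its command has been persisted.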

There are certain algorithms that try to maximize throughput, such as Nagle's algorithm. They do that by waiting for additional work to arrive before acting, which in our case would mean waiting for additional transactions before actually going to the disk. RavenDB doesn’t use that approach. If a system is idle and we get a single transaction, we’ll immediately execute and commit it.

But the fact that we don’t explicitly do Nagle doesn’t mean that batching isn’t useful. Because we have to wait for the disk, what ends up happening is that under load, we start getting more pending transactions in the queue, which will then be executed as a merged unit. In other words, RavenDB implements a dynamic batching approach, affected by the actual I/O constraints and the load on the system. If transactions arrive one at a time, with the system idle in between, we’ll execute each of them immediately. As the load increases, we’ll start making more merged transactions. This way we can keep a fairly consistent response time even when the load of the system grows by leaps and bounds.
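A tiny deterministic simulation shows how the batch size follows the load without any explicit waiting. This is a model I wrote for this post, with a fixed write time and arrival times chosen by hand; `simulate_batches` is a hypothetical name.

```python
def simulate_batches(arrival_times_ms, write_ms):
    """Return the size of each merged batch for the given (sorted) arrival times."""
    batches = []
    now = 0.0
    i = 0
    n = len(arrival_times_ms)
    while i < n:
        # If the writer is idle, it starts the moment the next transaction arrives.
        now = max(now, arrival_times_ms[i])
        j = i
        # Everything that arrived while the previous write was in flight is merged.
        while j < n and arrival_times_ms[j] <= now:
            j += 1
        batches.append(j - i)
        now += write_ms  # the durable write costs the same regardless of batch size
        i = j
    return batches


# Light load: one transaction every 10 ms, writes take 5 ms.
print(simulate_batches([0, 10, 20, 30], write_ms=5))  # [1, 1, 1, 1]
# Heavy load: one transaction every 1 ms, same disk.
print(simulate_batches(list(range(20)), write_ms=5))  # [1, 5, 5, 5, 4]
```

Note that in both runs no transaction waits for anything except the write that is already in flight.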

The situation is similar when we are talking about distributed transactions. RavenDB uses the Raft protocol for managing its distributed behavior. I’m going to focus just on the batching aspect of the behavior. RavenDB will send an AppendEntries message to the other members in the cluster every 200 ms or so. However, if we have a new command to send to the cluster, it will go out immediately over the network. An important factor here is that we are using TCP, and we require acknowledgment from the other side before we send the next message. As a result of those behaviors, we have pretty much the same situation. Depending on the network latency and the processing time, we’ll send more entries in a single roundtrip.
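The same idea on the network side, for a single follower, might look roughly like this. It is a sketch and not RavenDB's Raft implementation: `FollowerLink`, `send` and the callback names are assumptions of mine, and everything else a real AppendEntries message carries (terms, indexes, commit position) is left out.

```python
class FollowerLink:
    """Leader-side view of one follower, showing only the batching behavior."""

    def __init__(self, send):
        self._send = send        # hypothetical transport: send(list_of_entries)
        self._pending = []
        self._in_flight = False  # True while we wait for the follower's acknowledgment

    def append(self, entry):
        self._pending.append(entry)
        if not self._in_flight:
            self._flush()        # nothing outstanding: the command goes out immediately

    def on_ack(self):
        self._in_flight = False
        if self._pending:
            self._flush()        # everything that piled up goes out in one message

    def on_heartbeat_timer(self):
        # The periodic message (every 200 ms or so); it is empty when nothing is pending.
        if not self._in_flight:
            self._flush()

    def _flush(self):
        batch, self._pending = self._pending, []
        self._in_flight = True
        self._send(batch)


sent = []
link = FollowerLink(sent.append)
link.append("a")  # sent right away
link.append("b")  # queued, "a" is still unacknowledged
link.append("c")  # queued
link.on_ack()     # "b" and "c" go out together
print(sent)       # [['a'], ['b', 'c']]
```

The longer the roundtrip takes, the more entries accumulate in `_pending`, so the batch size again adapts to latency and load on its own.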

In short, the overall behavior for RavenDB is that we’ll start the operation immediately on the first action (both for disk and network), and then we’ll batch anything that arrives while the first operation is in flight and send that together as the next operation.

After over a decade of working in this manner, I can say that this has proven to be a highly adaptable system that results in the minimum number of knobs to mess with. It favors latency over throughput when there isn’t a lot of activity and shifts toward favoring throughput over latency as the load grows.
