Production postmortem: Deduplicating replication speed

A customer called us with an interesting issue. They have a decently large database (around 750GB or so) that they want to replicate to another node. They did all the usual things that you need to do and the process started running as expected. However… that wouldn’t make for an interesting postmortem post if everything actually went right…

Their problem was that the replication stalled midway through. There were no resource limits, but the replication didn’t progress even though the network traffic was high. So data was clearly moving between the nodes, but for some reason the replication itself wasn’t advancing.

We first ruled out the usual suspects (a replication issue causing a loop, a bad network, etc.) and we were left scratching our heads. Everything seemed to be fine. The replication was working, but at a rate of about 1 to 2 documents a minute. In the almost 12 hours since the replication started, only about 15GB were replicated to the other side. That was way outside expectations; we had assumed that the whole replication wouldn’t take this long.

It turns out that the numbers we got were a lie. Not because the customer misled us, but because RavenDB does some smarts behind the scenes that end up being pretty hard on us down the road. To get the full picture, we need to understand exactly what we have in the customer’s database.

Let’s say that you store data about Players in a game. Each player has a bunch of stats, characters, etc. Whenever a player gets an achievement, the game will store a screenshot of the achievement. This isn’t the actual scenario, but it should make it clear what is going on. As players play the game, they earn achievements. The screenshots are stored as attachments inside of RavenDB. That means that for about 8 million players, we have about 72 million attachments or so.

That explains the size of the database, of course, but not why we aren’t making progress in the replication process. Digging deeper, it turns out that most of the achievements are common across players (naturally), and that in many cases, the screenshots that you store in RavenDB are also identical.

What happens when you store the same attachment multiple times in RavenDB? Well, there is no point in storing it twice, so RavenDB does transparent de-duplication behind the scenes and only stores the attachment’s data once. Attachments are de-duplicated based on their content, not their name or the associated document. In this scenario, completely accidentally, the customer set up an environment where they would upload a lot of attachments to RavenDB, which are then de-duplicated by RavenDB.
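To make content-based de-duplication concrete, here is a minimal sketch of the idea in Python. It is not RavenDB’s implementation: the `DedupAttachmentStore` class, its method names, and the choice of SHA-256 as the content hash are all assumptions made for illustration.

```python
import hashlib


class DedupAttachmentStore:
    """Toy content-addressed attachment store (hypothetical, for illustration only)."""

    def __init__(self):
        self.blobs = {}  # content hash -> attachment bytes, stored once
        self.refs = {}   # (document id, attachment name) -> content hash

    def put(self, doc_id, name, data):
        # The key is derived from the content, not from the name or the document.
        digest = hashlib.sha256(data).hexdigest()
        self.blobs.setdefault(digest, data)  # store the bytes only if they are new
        self.refs[(doc_id, name)] = digest
        return digest

    def get(self, doc_id, name):
        return self.blobs[self.refs[(doc_id, name)]]

    def logical_size(self):
        # Size as the application sees it: every reference counts in full.
        return sum(len(self.blobs[h]) for h in self.refs.values())

    def physical_size(self):
        # Size actually held by the store: each unique blob counts once.
        return sum(len(b) for b in self.blobs.values())


store = DedupAttachmentStore()
screenshot = b"\x89PNG" + b"\x00" * 1000  # 1,004 bytes of fake image data
for player in range(3):
    store.put(f"players/{player}", "first-win.png", screenshot)

print(store.logical_size(), store.physical_size())  # 3012 1004
```

Three players hold the same screenshot, so the store reports three attachments’ worth of logical data while keeping a single copy of the bytes.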

None of that is intentional, it just came out that way. To be honest, I’m pretty proud of that feature, and it certainly helped a lot in this scenario. Most of the disk space for this database was taken by attachments, but only a small number of the attachments are actually unique. Let’s do some math, then.

The total size of the attachments on disk is about 700GB. There are about half a million unique attachments, which means that the average size of an attachment is about 1.4MB or so. There are a total of 72 million attachments, so the total size of attachments (without de-duplication) is over 100 TB.
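The arithmetic behind those figures can be checked with a few lines of Python. The inputs are the rounded numbers quoted above, and decimal units (1 GB = 10^9 bytes) are an assumption, so treat the results as estimates.

```python
GB = 10**9
MB = 10**6
TB = 10**12

stored_bytes = 700 * GB         # attachment data actually on disk
unique_attachments = 500_000    # distinct attachment contents
total_attachments = 72_000_000  # attachment references across all documents

# Average size of one attachment, derived from the de-duplicated data.
average_size = stored_bytes // unique_attachments

# Size of the data if every reference were stored (or sent) in full.
logical_bytes = average_size * total_attachments

# How many references point at each unique attachment, on average.
references_per_blob = total_attachments // unique_attachments

print(f"{average_size / MB:.1f} MB")   # 1.4 MB
print(f"{logical_bytes / TB:.1f} TB")  # 100.8 TB
print(references_per_blob)             # 144
```

Put differently, each unique attachment is referenced about 144 times on average, which is why the logical size is so much larger than what is on disk.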

I’ll repeat that: the actual size of the data is about 100 TB. It is just that RavenDB was able to optimize that using de-duplication, keeping significantly less on disk thanks to the pattern of data that is stored in the database.

However, that applies at the node level. What happens when we have replication? Well, when we send an attachment to the other side, even if it is de-duplicated on our end, we don’t know if it is on the other side already. So we always send the attachments. In this scenario, where we have so many duplicate attachments, we end up sending way too much data to the other side. The replication process isn’t sending 750GB to the other side but 100 TB of data.

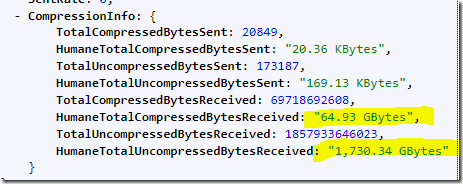

The customer was running RavenDB 5.2 at the time, so the first thing to do when we figured this out was to upgrade to RavenDB 5.3. In RavenDB 5.3 we have implemented TCP compression for internal data (replication, subscription, etc). Here are the results of this change:

In other words, we were able to compress the 1.7 TB we sent to under 65 GB. That is a nice improvement. But the situation is still not ideal.

De-duplication over the wire is a pretty tough problem. We don’t know what the state is on the other side, and the cost of asking each time can be pretty high.

Luckily, RavenDB has a relevant feature that we can lean on. RavenDB has to handle a scenario where the following sequence of events occurs (two nodes, A & B, with one way replication happening from A to B):

- Node A: Create document – users/1

- Node B: Replication of users/1 document

- Node A: Add attachment to users/1 (also modifies users/1)

- Node B: Replication of attachment for users/1 & users/1 document

- Node A: Modify users/1

- Node B: Replication of users/1 (but not the attachment, it was already sent)

- Node B: Delete users/1 document (and the associated attachment)

- Node A: Modify users/1

- Node B: Replication of users/1 (but not the attachment, it was already sent)

- Node B is now in trouble, since it has a missing attachment

Note that this sequence of events can happen in a distributed system, and we don’t want to leave “holes” in the system. As such, RavenDB knows to detect this properly. Node B will tell Node A that it is missing an attachment and Node A will send it over.

We can utilize the same approach. RavenDB will now remember the last 16K attachments that it sent in the current connection to a node. If the attachment was already sent, we can skip sending it. But if it is missing on the other side, we fall back to the missing attachment behavior and send it anyway.
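The approach can be sketched as a bounded cache of recently sent attachment hashes, combined with the missing-attachment fallback. This is a simplified model, not RavenDB’s code: `RecentlySent`, `replicate`, the least-recently-used eviction policy, and the dictionary standing in for the destination node are all assumptions made for illustration.

```python
from collections import OrderedDict


class RecentlySent:
    """Remembers the last `capacity` attachment hashes sent on a connection."""

    def __init__(self, capacity=16 * 1024):
        self.capacity = capacity
        self._seen = OrderedDict()

    def should_send(self, content_hash):
        if content_hash in self._seen:
            self._seen.move_to_end(content_hash)  # refresh its position
            return False
        self._seen[content_hash] = True
        if len(self._seen) > self.capacity:
            self._seen.popitem(last=False)  # evict the oldest entry (assumed policy)
        return True


def replicate(items, payloads, cache, destination):
    """Send (doc_id, content_hash) items; return how many payloads crossed the wire."""
    sent = 0
    missing = []
    for _doc_id, content_hash in items:
        if cache.should_send(content_hash):
            destination[content_hash] = payloads[content_hash]
            sent += 1
        elif content_hash not in destination:
            # The destination reports that it does not have this attachment.
            missing.append(content_hash)
    for content_hash in missing:
        if content_hash not in destination:  # fall back and send it anyway
            destination[content_hash] = payloads[content_hash]
            sent += 1
    return sent


payloads = {"h1": b"a" * 10, "h2": b"b" * 10, "h3": b"c" * 10}
items = [(f"players/{i}", f"h{i % 3 + 1}") for i in range(1000)]
cache, node_b = RecentlySent(), {}

first_pass = replicate(items, payloads, cache, node_b)
del node_b["h1"]  # the attachment disappears on the destination
second_pass = replicate(items, payloads, cache, node_b)
print(first_pass, second_pass)  # 3 1
```

In this model, a thousand attachment references cost three payload transfers, and an attachment that goes missing on the destination is still repaired through the fallback path.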

In a scenario like the one we face, where we have a lot of duplicated attachments, that can reduce the workload by a significant amount, without having to change the manner in which we replicate data between nodes.
