Production postmortem: The missed indexing reference

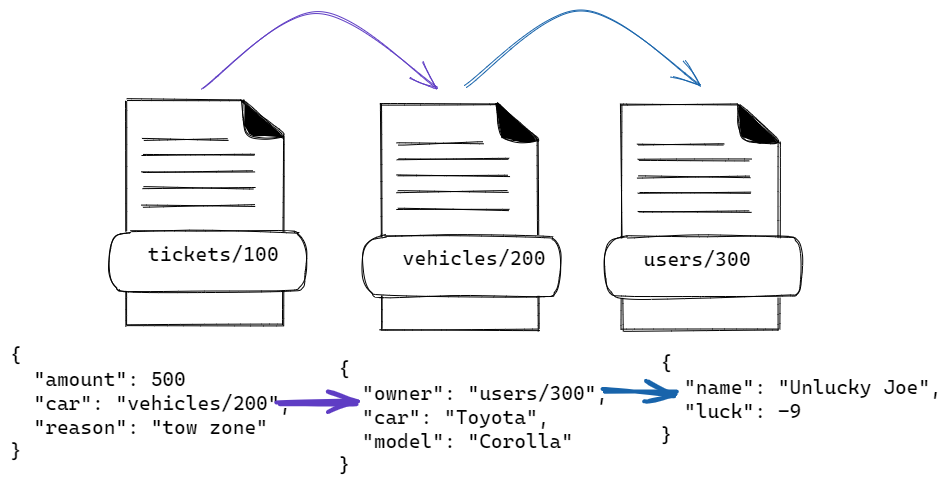

RavenDB has a really nice feature, it allows you to index data from related documents. Consider the following document structure:

We have tickets, vehicles, and users, and we want to issue a search on all the tickets issued to Joe. Leaving aside whether this is the proper way to handle this, here is what the index would look like:

What we are doing here is walk the reference graph and index data from related documents. So far, so good. The cool thing about this feature is that RavenDB is in charge of ensuring that if we update the owner of the vehicle or the name of the user, the Right Thing will happen.

Of course, I wouldn’t be writing this blog post if we didn’t run into a problem in this scenario.

The way it works, for each collection referenced by the index, RavenDB maintains a list of the last document that was chceked for changes in the collection. That way, on modification of a related document, we can tell that we need to re-index a particular document.

This looks something like this:

In other words, for each document that was loaded by another during indexing, we keep a list of the referencing documents.

Let’s say that we update document vehicles/200. That would be written to the storage with a new etag, and the index would wake up. It would ask to get all the documents in the Vehicles collection after etag 456, get vehicles/200 and then check the ReferencedBy and find that the document tickets/100 loaded it. At this point, it will re-index tickets/100 to ensure we have the latest values.

There is quite a bit more to this process, of course, I’m skipping on a lot of optimizations and detail work. For the purpose of this post, we don’t need any of that.

A customer reported that (very rarely), an index similar to the one above would “miss” on updates. That should not be possible. As much as I love this feature, conceptually, it is a very simple one, there isn’t much here that can fail. And yet, it did. Figuring out what was happening required us to look very deeply into the exact series of steps that were taken to produce this output. It turns out that our approach had a hole in it.

We assume that the writes would always happen in an orderly fashion. In other words, that the writes would be consistent. But there is no actual requirement for that.

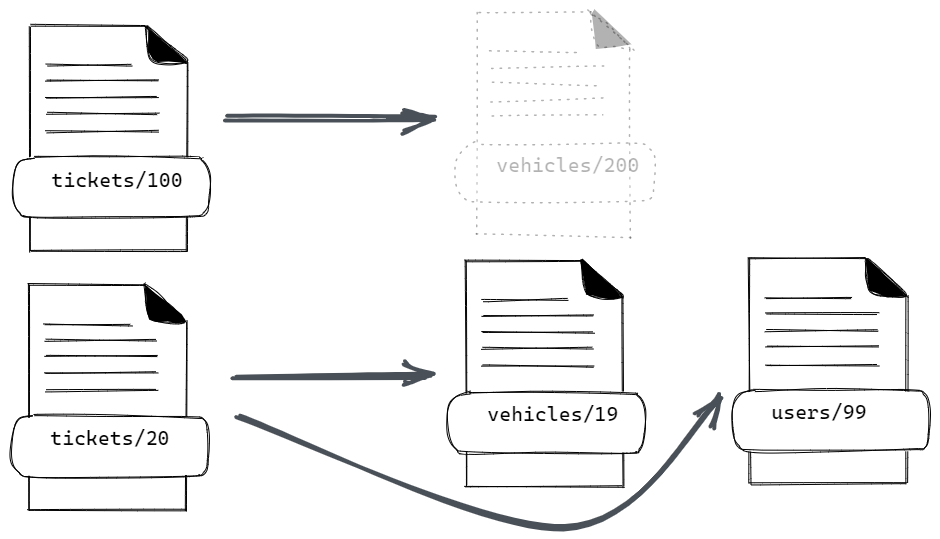

Consider what happens if I write just the ticket document to the database:

- RavenDB will index the ticket document

- It will attempt to load the associated vehicle, figure out that there is no such document and move on

- The related user document, of course, is not known at this point (since there is no vehicle document)

The end result is that we have the following data internally:

That is fine, when we’ll add the vehicle and the user, we’ll do the appropriate wiring, no?

In almost all cases, that is exactly what will happen. However, consider the metadata above. We are concerned here with tickets/100, but there is also tickets/20, whose references exist properly. So the structure we have right now in terms of reference tracking is:

It’s important to note that the references are always kept from the initial ‘tickets’ document. So even though the path from tickets/20 to users/99 goes through vehicles/19, the relationship is a direct association.

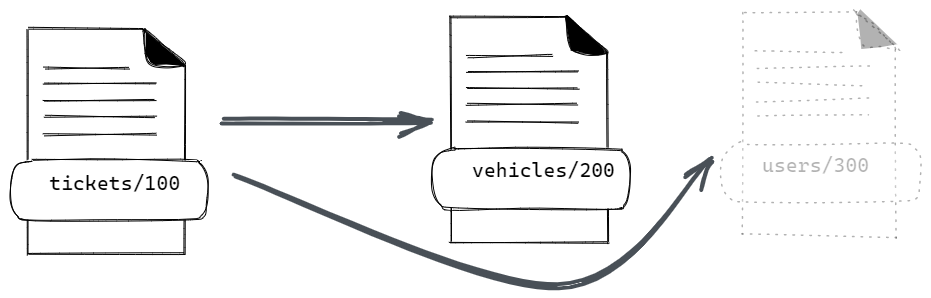

What will happen if we’ll insert just the users/300 document now? Well, there is no reference to this document, so we’ve no reason to do anything with it. But that isn’t a problem. When vehicles/200 is inserted, this will be fixed.

On the other hand, if we add just vehicles/200 to the database (with users/300 not being present), that is a change in a tracked document, which will cause us to index the referencing document (tickets/100) again and move us to this state:

When we will then add users/300, document tickets/100 will have the record of this reference and we’ll re-index it.

In other words, we are covered on both sides. Except, that there is still this pesky (and impossible) problem that the user is seeing.

Now, consider the following state of affairs, we are back in the initial state, both vehicles/200 and users/300 are missing in the database and tickets/20, vehicles/19 and users/99 are there.

We add vehicles/200 to the database, and there is a re-indexing process going on. At the same time that we re-index tickets/100 because of the new vehicles/200 document, we are adding the users/300 document in a separate transaction.

That means that during the indexing of tickers/100, we’ll see document vehicles/200 but not the users/300 document (even though it exists).

That is still not a problem, we’ll write the referencing record and on the next batch, detect that we have a user that we haven’t seen and re-index the document again.

Except… what if we didn’t update just the users/300 document in this case, what if we also updated users/99 at the same transaction (and after we insert document users/300).

Depending on the exact timings, we may end up missing document users/300 (because there was no reference to it at the time) but will notice that document users/99 was updated (we already had it referenced). Since users/99 was modified after users/300, we’ll record that we observed all the changes in the Users collection before users/99. That, crucially, also includes the users/300 that we never noticed.

This is confusing, I’ll freely admit. In order to reproduce this bug you need a non-standard pattern for creating references, a chain of at least two references, multiple independent references with different states, and an unlucky draw from Murphy with the exact timing of transactions, indexing and order of operations.

The root cause was that we recorded the newly added document reference in memory, and only updated them when the entire indexing batch was completed. During that time, there may have been multiple transactions that modified the documents. But because we didn’t sync the state until the end of the batch, we would end up missing this case. Solving the problem once we knew what was going on involved moving a single line of code from the outer loop to an inner one, basically.

Writing a reproducible test case was actually far harder, since so many things had to fall just so this would happen. I have to admit that I don’t have any strong conclusions about this bug. It isn’t something systematic or an issue that we missed. It is a sequence of unfortunate events with a very low probability of occurring that we never actually considered.

The really good thing about this issue is that it is the first one in this particular area of the code in quite some time. That means that this has been quite stable for many scenarios.

Woah, already finished? 🤯

If you found the article interesting, don’t miss a chance to try our database solution – totally for free!