That weird slow benchmark and its root cause

We care a lot about the performance of RavenDB, like a whole lot. To the point where we have a dedicated team that is continuously burning money CPU cycles testing out all sorts of scenarios with RavenDB. You can see the performance page on the website for some of their results. It got to the point where we stock NVMe drives at the office because we go through them often enough that we need them available for replacement. The benchmark must run, and the numbers must rise, after all.

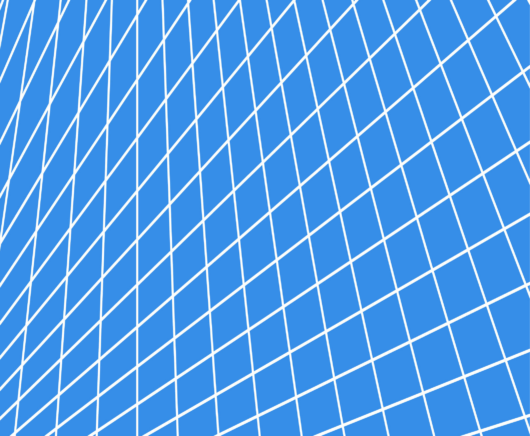

But the story today isn’t about the costs we pay to reach our performance goals. Rather, it is about a curious little snafu that we ran into when looking at the results. Here are the benchmark results, I intentionally stripped out everything that will give context to this story. What you can see is the same scenario being run on two identical machines, with the difference being the disk that is being used to host the database.

In this case, the blue line is io1 disk (high IOPS, low latency, and high costs) versus gp3 (reasonable IOPS, much higher latency, and lower costs). In this case, lower numbers are better.

If you’ll look at the benchmark, you can see that it makes complete sense. RavenDB is a database product, we are running a benchmark, and we use the disk. It’s predictable that the disk latency will have an impact on the performance of the benchmark.

Except… in this case, we are looking at a benchmark that is read-only, and it is meant to run completely from memory. We wrote it so the data size is less than the amount of memory on the system. So why do we have an impact of the disk at all in this case?

We even have a warmup phase before we actually start measuring, to ensure that everything is in memory. Something here does not line up.

After investigating deeper, we discovered the following:

- When running the automated benchmark, the performance was always correlated to the disk type.

- When running the same benchmark, manually, there was much better performance and no slowdown related to the slower disk.

That is a really annoying bug, because the fact that we are observing it somehow makes it go away? What is going on?

After a while, we finally figured it out. The problem was the warmup phase. Basically, the warmup is just running the benchmark itself, discarding the results.

Can you guess what the problem was?

The warmup phase is running when the system is cold (naturally), we were hitting the server with enough requests up front that it was unable to process them all (it was queuing on the disk). That meant that a very large portion of the requests in the warmup would timeout or just fail. When we started the benchmark phase, significant portions of the system were still on the disk. So the benchmark become a disk-bound test, with predictable results.

When we ran it manually, we would always do that after the benchmark already run, so our system would be warm (and fast, with no disk access).

The solution for the problem was to scale down the number of requests that the warmup phase is running, to allow gradual loading of the data to memory, without overloading the hardware.

A case where our expectations and what really happened did not line up, creating some… interesting surprises in the end result.

Woah, already finished? 🤯

If you found the article interesting, don’t miss a chance to try our database solution – totally for free!