This article is a thorough but accessible dive into the theory and rationale behind data compression algorithms and the way databases use them. We will compare the capabilities of a couple of different databases to find out how RavenDB is able to use compression so effectively.

Table of contents

- Introduction

- The Speed Paradox

- More Data In, Better Performance Out

- Compression in Document Databases

- The “Chunk” Compression Problem

- Uses of Chunk Compression

- The Zstd Compression Algorithm

- Dictionary Compression

- RavenDB’s Clever Use of Zstd

Table of contents

Introduction

If you had to guess the highest priorities of database designers, the ability to compress data would probably be high on your list. The more you can compress your data, the more of it fits on your disk – seems pretty essential. But you may have noticed that most of the popular database engines offer little or no data compression features out of the box. RavenDB is an odd duck in that regard. Why is that?

Well, the usefulness and efficiency of compressing data is very dependent on circumstance. For one thing, data storage space isn’t usually a limiting factor for most applications. The speed of the database is.

However, compression doesn’t have to come at the cost of speed, as you might expect: it can actually increase speed as well. As data is moved around, it passes through all sorts of bottlenecks that take much more time than compression and decompression. This includes things like I/O, or limited bandwidth.

So it saves a lot of time if you can make your data more compact before you move it around. If you’re on the cloud, it saves both time and money. There is also virtual memory to consider, which is more scarce than storage. So compression is useful for much more than just storage space – assuming it’s used appropriately.

There are other reasons that databases often don’t invest in compression capabilities. The performance of any compression algorithm is wholly dependent on the properties of the data itself. The circumstances and needs of the user can make a major difference. [De]compression has to be integrated seamlessly into the database’s operation.

And of course, many free and open source compression technologies exist and are in common use, like JPEG and GZIP, so databases don’t necessarily want to spend much effort reinventing the wheel. However, it is much easier on the user when the database they’re already using also offers powerful compression in the same package, rather than making them mix and match different tools ad hoc.

A database has to work smart in order to avoid working hard, and that means making compression a design priority from day one. RavenDB is such a database. It provides compression for many different scenarios and types of data. It is designed to get the best performance out of advanced algorithms. Compression is effortless and transparent to the user, rather than burdening you with figuring out compression trade-offs on your own.

In this article we will discuss how RavenDB compresses these types of data:

- Document collections

- Document revisions

- TCP and HTTP traffic

- Large text fields

- Map-reduce indexes

- Time Series data

The Speed Paradox

Data compression can either be a force multiplier for everything your application does, or it can be worse than useless. The most basic reason is that [de]compression comes at the cost of CPU. Besides CPU being a finite resource, this also means that compression takes some minimum amount of time.

Compression algorithms are judged by compression speed, decompression speed, and the compression ratio (calculated as the uncompressed size divided by the compressed size, a value of 1 or greater). There is a spectrum between high speed/low ratio algorithms and low speed/high ratio algorithms. Each algorithm is designed to occupy some niche in that spectrum.

Both at the ends of the spectrum, and in the activity of any given algorithm, there is a tendency to hit a barrier of diminishing returns. Speed can start to drop very fast the more you push for the best data compression ratio, and vice versa.

There is also the important difference between ‘lossless’ and ‘lossy’ algorithms. Lossy compression permanently alters the data – an example of this is the JPEG image format. RavenDB only uses lossless compression.

As mentioned above, extra storage space isn’t always worth the cost in speed. Storage is continuously getting cheaper and more plentiful – both because of the decreasing cost of a given GB of physical disk space, and because of the increasing availability of cloud storage. (Using RavenDB Cloud is a great way to take advantage of this.)

For many applications today the highest priority is the speed of the round-trip when users are communicating with the server. Every time you press a button on a website and have to wait for it to take effect, you’re hoping they aren’t wasting your time with unnecessary compression.

Of course, if your data needs to travel through a time consuming bottleneck, compression saves time by reducing the amount of data that needs to pass through it. This includes something as simple as writing and reading data to and from the disk. I/O per second (a.k.a. IOPS) is typically extremely slow compared to other processes. By the same token, on the cloud I/O time is usually a lot more expensive than CPU time.

What is true of I/O is also true of network bandwidth. This is why RavenDB compresses all TCP and HTTP traffic by default – both between RavenDB instances, and between server and client.

In contrast to all this, if you aren’t expecting your data to go through any significant bottlenecks, the compression time may well be the slowest part of your data pipeline.

More Data In, Better Performance Out

An algorithm’s potential efficiency is ultimately determined by the properties of the information you feed it. All algorithms depend on exploiting repetitive patterns in your data. This is also called data redundancy, and is closely related to information entropy.

Any pattern that is repeated many times can be summarized in something like the following way: you store just one copy of the repetitive pattern, and you record all the locations where the pattern is found in the uncompressed data. If a given pattern is repeated often enough, this can result in arbitrarily large compression ratios. If two chunks of information are of equal size, they are by no means equally compressible. A megabyte on your disk could contain random noise, or it could contain nothing but zeroes.

This rule of thumb generally holds: a large chunk of information tends to have more redundancy than any smaller piece of the same data. For example, if you look at most sentences in English (like this one) there might not be a single word that appears more than once. But as you increase your sample – even to just two or three sentences – you see a dramatic increase in redundancy.

It’s important to emphasize that this is not an iron law – compression performance does not always correlate with the amount of the data you feed it. Sometimes as you increase the amount of input you find yourself spending more and more CPU in exchange for smaller and smaller improvements in the compression ratio. In those cases, there might be a goldilocks zone between too little and too much input.

But when we’re talking about the kinds of data that people use day to day, it’s very likely that larger input results in higher compression ratios. In contrast, small chunks of data are usually less redundant, and that makes compression difficult. RavenDB’s document compression feature has a unique approach to small chunks – this is one of its biggest advantages. We discuss this further below.

Document Database Compression Strategies

Document databases are “NoSQL databases” – they’re different from the more common relational databases in that there is no rigid schema for data. A document is analogous to a row and the document’s fields are analogous to columns. Documents can be grouped in collections, which are roughly analogous to tables, but different documents in the same collection don’t have to have the same structure or even the same fields.

The relational schema lends itself to compression because the structure of a row is redundant across a whole table. But that doesn’t mean a document database has to be less efficient. You can choose to treat RavenDB like a relational database by creating a collection in which all documents have the same structure. In that case the redundancies are just as compressible as they would be in a schema. You can also choose to take advantage of NoSQL freedom and leave fewer redundancies to compress, or you can do anything in between.

Furthermore, document databases like RavenDB usually store data as JSON. This is a common data format that is used for many other applications, such as TCP requests and responses. This is convenient because the brackets, quotation marks, commas, and other text elements used in the format are highly redundant.

The “Chunk” Compression Problem

Returning to the previous topic: if we want to increase the amount of input data, we face a problem. A limitation of many algorithms is that they process data in indivisible chunks. A chunk of information is compressed all at once, and must also be decompressed all at once.

The result is that if you compressed 10 kilobytes of data all at once, but now you only want to decompress one kilobyte, you’re out of luck. You have to pay the cost of decompressing all 10 kB in CPU and other resources. There is no universal term for this type of compression, I am calling it “chunk” compression here. Another way to think of it would be “isolated” compression.

The reason is that with many algorithms, there is not necessarily a one to one correspondence between a specific part of the compressed data and a specific part of the uncompressed data. One part of the compressed data might have a partial relationship with a bunch of different parts of the uncompressed data. Likewise, different parts of compressed data might depend on each other in order to be interpreted correctly during decompression.

Uses of Chunk Compression

Chunk compression is limited, but useful. In many databases, chunk compression is all they are able to offer. An example is the popular database MySQL. MySQL has native compression, but only in chunks called “pages”. These pages can be 1, 2, 4, 8, or 16 kilobytes. This means that you can’t take advantage of any redundancies that only appear on a scale larger than 16 kilobytes.

The assumption behind this feature is that there are limited situations in which you really want to decompress more than 16 kb at a time. Also, there might be diminishing returns for trying to compress more data at a time, as mentioned above. Therefore, MySQL’s data compression is flawed, but it might be adequate for many applications.

RavenDB makes good use of chunk compression in a few specific cases: field compression, map-reduce index compression, and time-series compression.

When you store a lot of text in one field, RavenDB automatically compresses it. The great thing about this is that it is not necessary to decompress a field in order to read or modify all of the other fields in the document. Even the name of a compressed field can be accessed separately. This works seamlessly with RavenDB’s out of the box full-text search and indexing capabilities.

Map-reduce indexes are a special type of index that allow you not only to query your data, but to perform fast complex aggregation queries. Map-reduce data is highly redundant, so RavenDB compresses all map-reduce indexes automatically with very high compression ratios.

Similarly, RavenDB’s time-series feature compresses data using Gorilla compression and the LZ4 algorithm. Time-series data consists of series of numerical values (entries) each associated with a time stamp. They can be highly compressible because you can choose to only store the differences between consecutive entries, rather than recording each entry separately. In fact, RavenDB is typically able to record an entry in just 4 bits, and that’s before the actual compression.

But when necessary, RavenDB uses Zstd to circumvent the problems of chunk compression.

The Zstd Compression Algorithm

Zstandard, or Zstd, is an open source algorithm developed a few years ago for Facebook. Like all members of the LZ family of algorithms, it is lossless. It scores very high in both speed and compression ratio. With 22 possible ‘levels’ of compression, it occupies a wide range in the middle of the speed-ratio spectrum. Its performance is similar to if not better than many other algorithms in the same parts of the spectrum.

Zstd is especially good at decompression speed. Usually, decompression speed increases roughly in proportion to compression speed, and decreases in proportion to compression ratio. In Zstd decompression speed is very high and relatively constant across different compression levels. This makes Zstd especially helpful in all scenarios in which the speed of reads is the highest priority.

But by far the most interesting aspect of Zstd compression is its dictionary training feature. All LZ algorithms use dictionaries, but they use them for chunk compression – one dictionary per chunk. Zstd revolutionizes compression by training dictionaries on sample data, and then freeing that dictionary to be used flexibly to compress any data you like. It even allows you to decompress smaller parts within a compressed chunk.

Dictionary Compression

To understand how dictionaries work we need to understand how compression works without a dictionary.

In common types of compression, compressed data is arranged in the same order as the decompressed data. It consists of pieces of data in the raw format. These pieces contain any non-redundant information, plus the single copies of redundant sequences. In between those pieces are the references to redundant sequences – and these point to those same raw pieces of data.

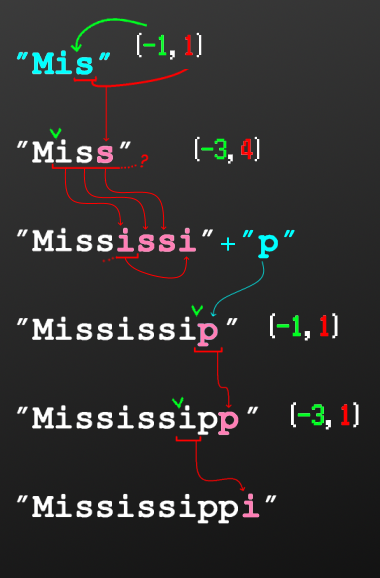

Let’s compress the word Mississippi. It comes out looking something like this:

“Mis” (-1,1) (-3,4) “p” (-1,1) (-3,1)

How do we decompress this? Start with the first text in quotes, then add more text according to the parentheses. The first number in the parentheses tells you how far to go back to find the redundant part, and the second number tells you how long that redundant part is.

The first parentheses are at the end of “Mis”. They tell you to go one character back, to “s”, and to only repeat one character, that same “s”. Now we have “Miss”. Next, we go three characters back, to “i”, then four characters forward. Those are “i”, “s”, “s”… how do we get a fourth character? In this format, we can continue to the new “i” that we have just added. This gives us “Mississi”. Then add “p”, and so on.

The compression ratio here isn’t great because the repeated sequences are very short, but don’t worry about that right now. What does all of this tell us? That in this simple approach to compression, pieces of the raw data are used as the repository for redundant sequences, leading to this complex recursive pattern we’ve just analyzed.

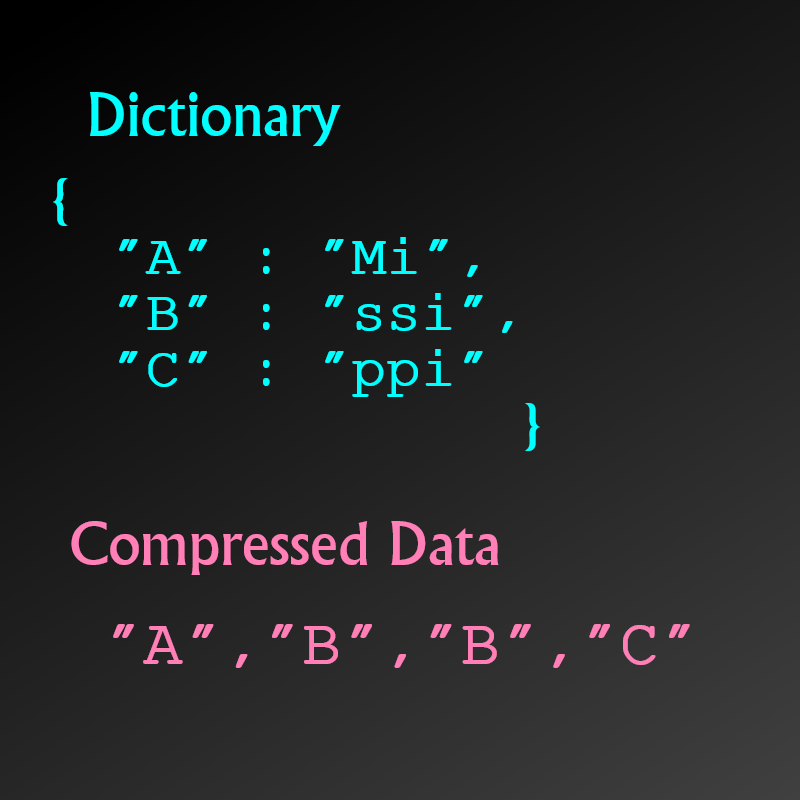

A dictionary consists only of a repository of redundant sequences. It is not part of the compressed data per se. So for example, if we were to create a dictionary for Mississippi, we can record the sequence “ssi” or “iss”. This is a little simpler than what we did before: we had to use one reference to create the sequence “iss”, and only then could the second reference point to it. With a dictionary Mississippi can now be compressed something like this:

Rather than references having to point to the pieces of data that precede that reference, they are free to point to any part of the dictionary. Dictionaries can be very efficient, but it is Zstd’s dictionaries that reach the full potential of dictionary compression. Zstd separates the dictionary from the compressed data entirely. That way, a single dictionary can be used to compress a kilobyte, or a terabyte.

It also finally frees us from chunk compression, because references don’t depend on other parts of a compressed chunk, they point to something external.

RavenDB’s Clever Use of Zstd

We can finally talk about RavenDB’s Documents Compression feature. RavenDB is not the only database that uses Zstd. It isn’t even the only document-oriented database that uses Zstd – MongoDB does as well. However, more than two years after they introduced Zstd in version 4.2, MongoDB still isn’t making use of Zstd’s dictionary training capabilities.

RavenDB applies compression to one collection of documents at a time. RavenDB trains Zstd on the first few documents to create a dictionary, and that dictionary is then used to compress all further data that enters the collection.

Better yet, the database monitors the compression ratio of new documents. If the ratio falls below a certain threshold, RavenDB automatically trains a new dictionary on the most recently modified documents in the collection. If this new dictionary performs better than the last one, it replaces it. Thus data entering the collection continues to be compressed with no interruption, and with a high ratio.

This entire process is transparent to the user. All you need to do is choose which collections to compress, and RavenDB does the rest. Zstd compression can also be applied to all document revisions in the database. Revisions are a record of every change made to every document in a database. They provide a way to track your data’s past, allowing you to restore old data or recover from an unexpected server failure. By training Zstd on the revisions in one database, high compression ratios can be achieved for this important but less often accessed part of your data.

Conclusion

Hopefully this article gave you some insight into compression, both in general and in the context of database engines. And now you understand how RavenDB is able to provide highly efficient compression for a wide range of scenarios, all with minimum fuss. It is the only major database that has been so carefully designed with compression in mind. You can also see how a database’s choice of algorithm can be crucial: both to its own capabilities, and to how the user can take advantage of it.

There are many free compression technologies available. But whenever you add something to your stack it can easily result in unexpected compatibility issues. If you choose your database wisely, you won’t have to figure out compression on your own, or pay through the nose for cloud resources. RavenDB’s mission in life is to give you ease and peace of mind. You can focus on your product, knowing that the data is well taken care of at the backend.

If you’d like to see how RavenDB can support your application, click here to book a free demo.

Woah, already finished? 🤯

If you found the article interesting, don’t miss a chance to try our database solution – totally for free!