Optimizing Corax: Optimizing tree operations

I love trees. Not the ones that produce oxygen (I guess they are nice too), but trees in software. To be more exact, I’m fascinated by B+Trees and all their permutations. In a very real sense, most databases are all about B+Trees. To give you some context, Voron alone, at this time, has the following:

- Tree – the core used to map an arbitrary byte string key to an arbitrary sized value. This supports many operations, including compressed values, overflow pages, etc. Typically used to implement storage indexes over non-numeric data.

- Fixed Size Tree – a more compact implementation when more data is known, with int64 key and a fixed size value (which may be zero). Typically used to implement storage indexes over numeric data.

- Multi Tree – a tree whose keys are arbitrary byte string keys and whose value is itself a tree (with no value) that can contain multiple values for a single entry. Used to implement non-unique storage indexes.

- Compact Tree – a tree for arbitrary byte string keys to an int64 value. The keys are compressed and we can pack a lot of values in a single page. Used to handle the field terms in Corax.

- Set – a tree used to efficiently store int64 values in an ordered manner without duplicates. The data is heavily compressed and is used to handle large posting lists in Corax.

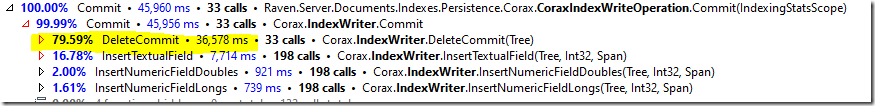

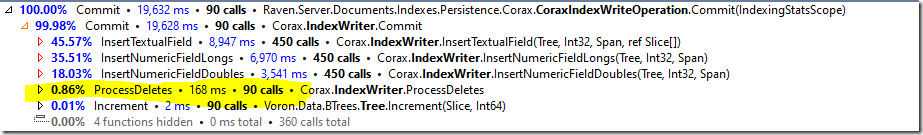

And I’m probably forgetting a few. Those are all various permutations on a B+Tree. And together they create a really interesting set of capabilities for Voron. Today I want to talk to you about an issue we ran into when building Corax, in particular, this one:

Before we dive into exactly what is going on here, I feel that I need to give some background. Corax is an indexing engine, and at its core is an inverted index. When we need to delete an entry, we need to remove references to it from all the terms that it is a part of. The code producing the above is implemented roughly like this:

That particular piece accounts for almost 80% of the indexing commit time (and a significant fraction of the total indexing time). That is… just the way it is, I’m afraid. We have to process things in this manner. Lucene does things differently: it marks the entry as deleted (and ignores it afterward), eventually merging the results away. I really didn’t want to go down that route. One of the key design principles for Corax is that we want to avoid deferred payments; we want to pay everything in “cash” right now.

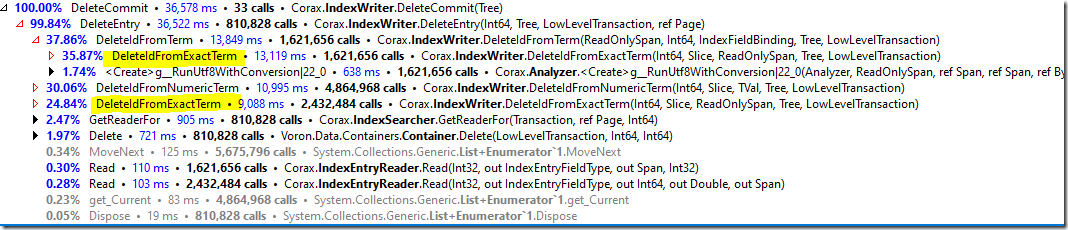

If we look deeper into the DeleteCommit() function, we can see some interesting details:

The DeleteIdFromExactTerm() is the piece that is doing the majority of the work, accounting for over 60% of the runtime for processing deletes. Let’s dig deeper here:

And we can see that this is actually really fast. The total cost for invoking this method is 5.5 μs. The problem is that we invoke it 4 million times.
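As a rough back-of-the-envelope check, taking both figures above as round numbers, multiplying the per-call cost by the number of calls gives the total:

$$
5.5\ \mu\text{s} \times 4 \times 10^{6} = 22 \times 10^{6}\ \mu\text{s} = 22\ \text{s}
$$

So a call that is individually cheap still adds up to roughly 22 seconds.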

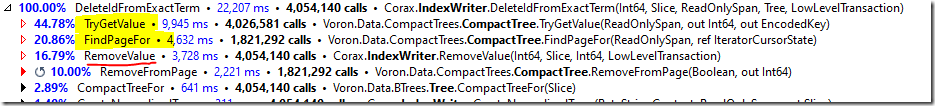

And looking into the actual costs, what is the issue? It is the TryGetValue() and FindPageFor() calls. Those scan through the tree structure to find the relevant place from which to remove the value.

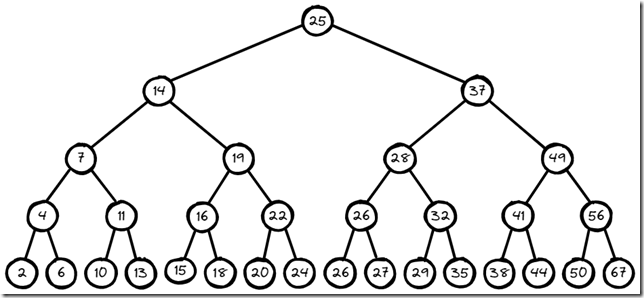

As a reminder, here is a tree structure (it isn’t a B+Tree, mind, because we don’t need one to understand what is going on). What we have is a lot of calls to Remove() on this tree, and each call has to start searching from the root. It turns out that this is quite expensive:

Consider what happens if I want to remove 15 and 20. I have to compare 25, 14, 19, 16 and 15. Then I have to go back to the root and compare 25, 14, 19, 22 and 20. There are a lot of commonalities here that I can take advantage of. If I can operate locally, I can probably do better, no?
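To make the overlap concrete, here is a minimal sketch in Python (not Corax’s C#) that records the keys compared on each lookup. The `Node` class, the `search_path` helper and the exact tree shape are assumptions reconstructed from the comparison sequences above, and only the nodes those two lookups touch are modeled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def search_path(root: Optional[Node], target: int) -> List[int]:
    """Return every key compared while descending from the root to target."""
    path = []
    node = root
    while node is not None:
        path.append(node.key)
        if target == node.key:
            return path
        node = node.left if target < node.key else node.right
    raise KeyError(target)


# Hypothetical tree: only the nodes visited when looking up 15 and 20.
root = Node(
    25,
    left=Node(
        14,
        right=Node(
            19,
            left=Node(16, left=Node(15)),
            right=Node(22, left=Node(20)),
        ),
    ),
)

path_15 = search_path(root, 15)
path_20 = search_path(root, 20)

# Count how many leading comparisons the two lookups have in common.
shared = 0
for a, b in zip(path_15, path_20):
    if a != b:
        break
    shared += 1
```

Both lookups start with the same three comparisons (25, 14, 19), and that repeated prefix is the work a root-based search redoes on every call.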

We rewrote the delete code to be more like this:

The idea is that we do the deletion of terms in two stages. First, we gather all the ids we need to delete for all the terms from all the entries that are being deleted. Then we sort those values, and then we invoke a batch delete method.
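A minimal sketch of that two-stage flow, in Python rather than Corax’s C#. Everything here is an assumption made for illustration: the index is modeled as a plain dict from term to a sorted list of entry ids, and `gather_deletes`, `batch_remove` and `delete_commit` are hypothetical names, not Corax APIs.

```python
from collections import defaultdict
from typing import Dict, List


def gather_deletes(deleted_entries: Dict[int, List[str]]) -> Dict[str, List[int]]:
    """Stage 1: group the ids to remove by the term that references them."""
    ids_by_term: Dict[str, List[int]] = defaultdict(list)
    for entry_id, terms in deleted_entries.items():
        for term in terms:
            ids_by_term[term].append(entry_id)
    return ids_by_term


def batch_remove(posting_list: List[int], sorted_ids: List[int]) -> List[int]:
    """Stage 2: drop sorted ids from a sorted posting list in one forward pass."""
    kept = []
    i = 0
    for value in posting_list:
        # Advance the delete cursor past ids smaller than the current value.
        while i < len(sorted_ids) and sorted_ids[i] < value:
            i += 1
        if i < len(sorted_ids) and sorted_ids[i] == value:
            continue  # this id is being deleted
        kept.append(value)
    return kept


def delete_commit(index: Dict[str, List[int]], deleted_entries: Dict[int, List[str]]) -> None:
    for term, ids in gather_deletes(deleted_entries).items():
        ids.sort()  # the batch step relies on sorted input
        index[term] = batch_remove(index[term], ids)


# Toy index: term -> sorted posting list of entry ids.
index = {"red": [1, 2, 3, 7], "blue": [2, 5, 7], "green": [4]}
# Entries 7 and 2 are deleted; each lists the terms it appeared in.
delete_commit(index, {7: ["red", "blue"], 2: ["blue", "red"]})
```

Sorting first is what lets the second stage walk each posting list once, instead of restarting a search for every id.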

Unlike the individual RemoveValue() calls, we can now take advantage of the structure of the tree. In the case of wanting to remove [15, 20], we can scan the tree (25, 14, 19, 16, 15) to get to the first item that we remove. Then we proceed using the tree’s own structure. So deleting 20 means comparing (16, 19, 22, 20). In this case, we saved one operation, which isn’t that meaningful. But the most beautiful property of B+Trees is that they are dense. In this case, we are removing values from posting lists, which may contain millions of entries, and it isn’t uncommon to be able to pack thousands of entries per page. That means that the savings are huge.
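To get a feel for why dense pages matter, here is a toy model in Python (again, not the Corax implementation). It assumes a flat list of sorted pages standing in for the leaf level of the tree, with a binary search over each page’s first key standing in for a root-to-leaf descent. `PagedSet`, `remove_one`, `remove_sorted` and the `descents` counter are all hypothetical names, and the values being removed are assumed to be present.

```python
from bisect import bisect_right
from typing import Iterable, List


class PagedSet:
    """Toy stand-in for a B+Tree leaf level: sorted pages of sorted ints."""

    def __init__(self, values: Iterable[int], page_size: int) -> None:
        ordered = sorted(values)
        self.pages = [ordered[i:i + page_size] for i in range(0, len(ordered), page_size)]
        self.descents = 0  # number of searches that started from the "root"

    def _find_page(self, value: int) -> int:
        self.descents += 1
        first_keys = [page[0] for page in self.pages]
        return max(bisect_right(first_keys, value) - 1, 0)

    def remove_one(self, value: int) -> None:
        """One search from the top per removed value."""
        idx = self._find_page(value)
        self.pages[idx].remove(value)
        if not self.pages[idx]:
            del self.pages[idx]

    def remove_sorted(self, sorted_values: List[int]) -> None:
        """One search per touched page; the rest is handled locally."""
        i = 0
        while i < len(sorted_values):
            idx = self._find_page(sorted_values[i])
            # Values below the next page's first key belong to this page.
            upper = self.pages[idx + 1][0] if idx + 1 < len(self.pages) else None
            doomed = set()
            while i < len(sorted_values) and (upper is None or sorted_values[i] < upper):
                doomed.add(sorted_values[i])
                i += 1
            self.pages[idx] = [v for v in self.pages[idx] if v not in doomed]
            if not self.pages[idx]:
                del self.pages[idx]


to_delete = list(range(0, 10_000, 10))  # 1,000 ids spread over 10 pages

naive = PagedSet(range(10_000), page_size=1_000)
for value in to_delete:
    naive.remove_one(value)

batch = PagedSet(range(10_000), page_size=1_000)
batch.remove_sorted(to_delete)
```

In this toy setup the per-value path searches from the top once per id, while the batch path searches once per page it touches, so the denser the pages, the larger the gap between the two counters.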

This particular optimization was sufficient to ensure that we just don’t need to care about deletes any longer.

The ability to apply the operation in a batch fashion meant that we could amortize all of the setup costs, which led to a far greater reduction than I actually expected, to be honest.

The code in question can make a lot of assumptions about what is going on (the input is sorted, values are present in the tree, etc). That makes things so much easier.

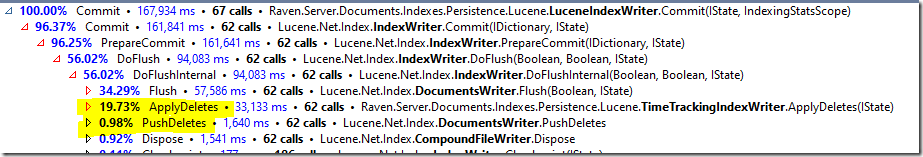

As a result, Corax can keep paying all costs upfront and still be so much faster than Lucene (which defers things to the background). To give some context, here are the relevant costs for Lucene:

Lucene spends more time processing deletes than Corax does for the whole commit process. Note that this is just a small part of the costs that deletes have for Lucene; there is an excellent blog post from Elastic describing them. The costs range from a 20% to a 46% slowdown in queries! That is an important reason to avoid deferring work, I think we can agree.

