RavenDB and multi region setup

RavenDB is a distributed database. You can run it on a single node, in a cluster in a single data center, or as a geo-distributed cluster. Separately, you can also run RavenDB in a multi-master configuration. In this case, you don’t have a single cluster spanning the globe, but multiple cooperating clusters working together. The question is, when should I use a geo-distributed cluster and when should I set up a multi-master configuration with multiple coordinating clusters?

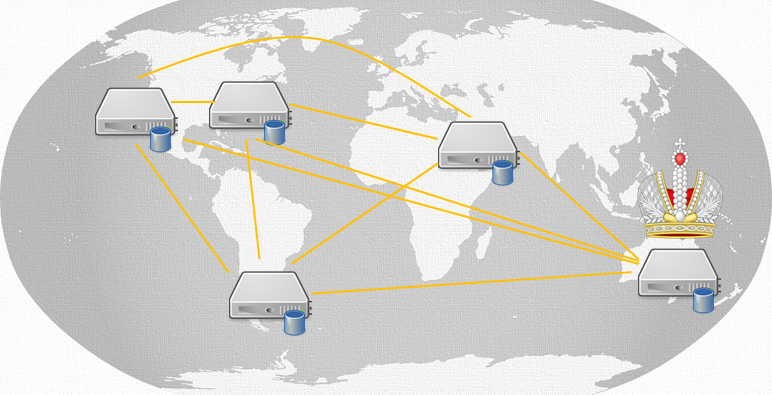

Here is an example of a global cluster:

As you can see, we have nodes all over the world, all joined into a single cluster. In this mode, the nodes will select a leader (denoted by the crown), which will manage all the behavior of the cluster. To ensure that we can properly detect failures, we set up a timeout interval that is appropriate for the distances involved. Note that even in this mode, most of the actual writes to RavenDB will be done on a pure node-local basis and gossiped between the nodes. You can select the appropriate level of write assurance that you want (confirm after it was written to two additional locations, for example).

Such a setup suffers from one issue: coordinating across that distance (and those latencies) means that we need to account for the inherent delay in the decision loop. For most operations, this doesn’t actually impact your system, since most of the work is done in the background and is not user visible. It does mean that RavenDB is going to take longer to detect and recover from failures. In the case of the setup in the image, we are talking about the difference between detecting a failure in less than 300ms (the default when running in a single data center) and detecting it in around 5 seconds or so.

Because RavenDB favors availability, it usually doesn’t matter. But there are cases where it does. Any time that you have to wait for a cluster operation, you’ll feel the additional latency. This applies not just to failure detection but also when everything is running smoothly. A cluster operation in the above setup will require confirmation from two additional nodes aside from the leader. Ping time in this case would be 200 – 300ms between the nodes, in most cases. That means that at best, any such operation would be completed in 750ms or so.
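To get a feel for why ping time dominates here, below is a rough model of a single round of confirmations. It is a sketch, not how RavenDB measures anything: the function name and the round-trip values are made up for illustration. It also counts only one parallel round from the leader to its followers, ignoring the hop from the client to the leader, disk writes and any further rounds, so the result is a floor for one round rather than a reconstruction of the 750ms figure above.

```python
def quorum_confirmation_ms(follower_rtts_ms, confirmations_needed):
    """Time until the leader holds the required number of follower confirmations.

    The leader contacts all followers in parallel, so it only waits for the
    k-th fastest reply, where k is the number of confirmations needed.
    """
    if not 1 <= confirmations_needed <= len(follower_rtts_ms):
        raise ValueError("confirmations_needed must be between 1 and the follower count")
    return sorted(follower_rtts_ms)[confirmations_needed - 1]


# Hypothetical round-trip times (ms) from the leader to four followers.
single_data_center = [0.4, 0.5, 0.6, 0.8]
geo_distributed = [210, 240, 280, 300]

# Two additional nodes must confirm, as described in the text.
local_ms = quorum_confirmation_ms(single_data_center, 2)
geo_ms = quorum_confirmation_ms(geo_distributed, 2)

print(f"single data center: {local_ms} ms per round")
print(f"geo distributed:    {geo_ms} ms per round")
```

Even in this optimistic model, each round in the geo-distributed case costs hundreds of milliseconds, while the same round inside one data center is well under a millisecond.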

What operations would this apply to? Creation of new databases and indexes is done as a cluster operation, but they are rarely latency sensitive. The primary issue for this sort of setup is if you are using:

- Cluster wide transactions

- Compare exchange operations

In those cases, you have to account for higher latency as part of your overall deployment. Such operations are inherently more expensive. If you are running in a single data center, with ping times that are usually < 1 ms, that is not very noticeable. When you are running in a geo distributed environment, that matters a lot more.

One consideration that we haven’t yet taken into account is what happens during failure. Let’s assume that I have a web application deployed in Brazil, which is aimed at the local RavenDB instance. If the Brazilian RavenDB instance decides to visit the carnival and not respond, what is going to happen? On the one hand, the other nodes in the cluster will simply pick up the slack. But for the web application in Brazil, that means that instead of using the local instance, we need to go wide to reach the alternative nodes. GitHub had a similar issue, but between the east and west coasts, and the additional latency inherent in such a setup took them down.

To be honest, beyond the additional latency that you have with cluster wide operations in such a setup, I think that this is the biggest disadvantage of such a system. You can avoid that by running multiple nodes in each location, all joined into a big cluster, of course. Then you can set things up so that each client will use the nearest nodes. That gives you local failover, but you still need to consider how to handle a total outage in one location.
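The three situations described here (everything up, one local node down, a whole location down) can be sketched with a toy node selector. This is only an illustration of the idea, not the RavenDB client’s actual selection logic: the `Node` type, the `pick_node` helper, the regions and the latency values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    tag: str
    region: str
    latency_ms: float  # as measured from this client (hypothetical values)
    healthy: bool = True


def pick_node(nodes):
    """Return the healthy node with the lowest latency, or None if all are down."""
    candidates = [n for n in nodes if n.healthy]
    return min(candidates, key=lambda n: n.latency_ms, default=None)


# A client in Brazil with two local nodes and two remote ones.
nodes = [
    Node("A", "brazil", 2.0),
    Node("B", "brazil", 3.0),
    Node("C", "us-east", 120.0),
    Node("D", "europe", 210.0),
]

# Everything is up: the client stays on the nearest local node.
print(pick_node(nodes).tag)

# One local node is down: failover stays inside Brazil.
one_local_down = [Node("A", "brazil", 2.0, healthy=False)] + nodes[1:]
print(pick_node(one_local_down).tag)

# Total outage in Brazil: the client has to go wide and pay the latency.
region_down = [
    Node(n.tag, n.region, n.latency_ms, healthy=(n.region != "brazil"))
    for n in nodes
]
print(pick_node(region_down).tag)
```

The last case is the one that still needs planning: the application keeps working, but every request now carries cross-region latency.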

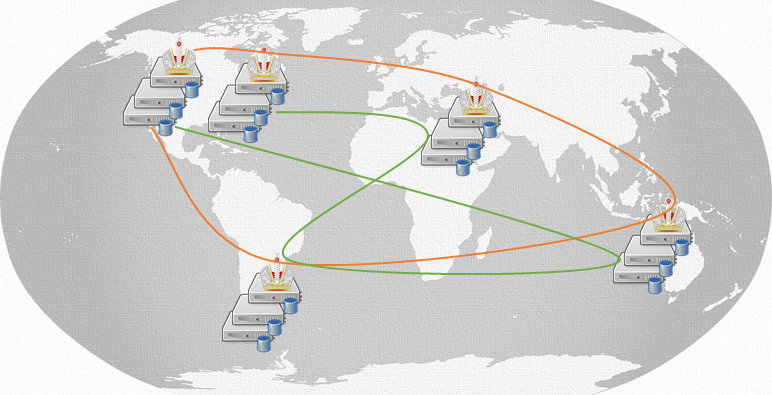

There is another alternative, in which you have separate clusters in each location (each may be a single instance, but I’m showing a cluster there because you’ll want local high availability). Instead of having a single cluster, we set things up so there are multiple such clusters. Then we use RavenDB’s multi-master capabilities to tie them all together.

In this setup, the different clusters will gossip between themselves about the data, but that is the only thing that is truly shared. Each cluster will manage its own nodes and failover and work is done only on the local cluster level, not globally.

Other things, such as indexes, ETL, subscriptions, etc., are all defined at the cluster level, and you’ll need to consider whether you’ll have them in each cluster (likely for indexes, for example) or only in a single location. Something like ETL would probably have a designated location that would be pushing the data to its destination, rather than being duplicated in each local cluster.

The most interesting question, however, is how do you handle cluster wide transactions or compare exchange operations in such an environment?

A cluster wide transaction is… well, cluster wide. That means that if you have multiple clusters, your consistency scope is only within a single one. That is probably the major limit for breaking apart the cluster in such a system.

There are ways to handle that, of course. You can split your compare exchanges so that each one is owned by a particular cluster. In this manner, you can direct certain operations to a particular cluster regardless of where the operation originated. In many environments, this is already something that will naturally happen. For example, if you are running in such an environment to deal with local clients, it is natural to hold their compare exchange values in the cluster they are using, even if the data is globally replicated.
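One way to picture this ownership split is a small routing table keyed by a prefix on the compare exchange key. This is a sketch under assumptions, not a RavenDB feature: the prefix convention, the `OWNERS` table, the `owning_cluster` helper and the URLs are all invented for illustration.

```python
# Hypothetical mapping from a key prefix to the cluster that owns it.
OWNERS = {
    "br/": "https://brazil.example.com",
    "eu/": "https://europe.example.com",
    "us/": "https://us.example.com",
}


def owning_cluster(key):
    """Return the URL of the cluster that owns this compare exchange key."""
    for prefix, url in OWNERS.items():
        if key.startswith(prefix):
            return url
    raise KeyError(f"no owning cluster configured for key {key!r}")


# Wherever the request originates, the consistency-sensitive step is sent
# to the owning cluster; ordinary document writes can stay local.
print(owning_cluster("br/usernames/maria"))
print(owning_cluster("eu/usernames/jan"))
```

With local clients, the prefix usually matches the cluster the client already talks to, so the extra hop only shows up for the occasional operation that originates elsewhere.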

Another factor to consider is that RavenDB replicates documents and their data, but compare exchange values aren’t considered in this case. They are global to the cluster, and as such aren’t sent via replication.

I’m afraid that I don’t have a single answer to the question of how to geo-distribute your RavenDB-based system. You need to account for several factors about your application, your needs and the system as a whole. But I hope that you now have the appropriate background to make an informed decision.
