Searching through text: Part II, Exploring posting lists persistence

In full text search terminology, a posting list is just a list of document ids. These are used to store and find matches for particular terms in the index.

I took the code from the previous post and asked it to give me the top 50 most frequent terms in the dataset and their posting lists. The biggest list had over 200,000 documents, and I intentionally use multiple threads to build things, so the actual list is going to be random from run to run (which adds a little more real-worldedness to the system*).

*Yes, I invented that term. It make sense, so I’m sticking with it.

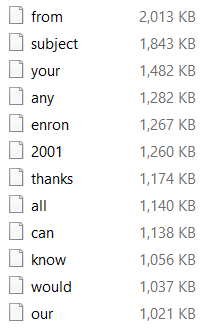

I took those posting lists and just dumped them to a file, in the simplest possible format. Here are the resulting files:

There are a few things to note here. As you can see, the file name is the actual term in the index, the contents of the file is a sorted list of int64 of the document ids (as 8 bytes little endian values).

I’m using int64 here because Lucene uses int32 and thus has the ~2.1 billion document limit, which I want to avoid. It also make it more fun to work with the data, because of the extra challenge. The file sizes seems small, but the from file contains over 250,000 entries.

When dealing with posting lists, size matter, a lot. So let’s see what it would take to reduce the size here, shall we?

Simply zipping the file gives us a massive space reduction, so there is a lot left on the table, which is great.

Actually, I might have skipped a few steps:

- Posting lists are sorted, because it helps do things like union / intersect queries.

- Posting lists are typically only added to.

- Removal are handled separately, with a merge step to clean this up eventually.

Because the value is sorted, the first thing I tried was to use a diff model with variable sized int. Here is the core code:

Nothing really that interesting, I have to admit, but it did cut the size of the file to 242KB, which is nice (and better than ZIP). Variable sized integers are used heavily by Lucene, so I’m very familiar with them. But there are other alternatives.

- StreamVByte is a new one, with some impressive perf numbers, but only gets us to 282 KB (but it is possible / likely that my implementation of the code is bad).

- FastPFor compresses the (diffed) data down to 108KB.

- RoaringBitmap gives us a total of 64KB.

There are other methods, but they tend to go to the esoteric and not something that I can very quickly test directly.

It is important to note that there are several separate constraints here:

- Final size on disk

- Computational cost to generate that final format

- Computation cost to go from the final format to the original values

- How much (managed) memory is required during this process

That is enough for now, I believe. My next post will deal delve into the actual semantics that we need to implement to get a good behavior from the system. This is likely going to be quite interesting.

Woah, already finished? 🤯

If you found the article interesting, don’t miss a chance to try our database solution – totally for free!