Import from SQL

-

SQL Migration allows you to import data from an SQL database to RavenDB.

-

SQL sources that data can be imported from currently include:

- MySQL

- SQL Server

- Oracle

- PostgresSQL

-

A semi-automatic import process resolves the original business model, taking into account SQL relations represented by linked foreign and primary keys and identifying one-to-one, one-to-many, and many-to-one relations.

-

Primary/Foreign keys must be defined in the source SQL database so the migrator would be able to perform correctly.

-

The SQL Migrator attempts to detect and automatically suggest the best data model.

Changes made while preparing the data model maintain data integrity and cohesion. -

In this page:

Import Data From SQL Source

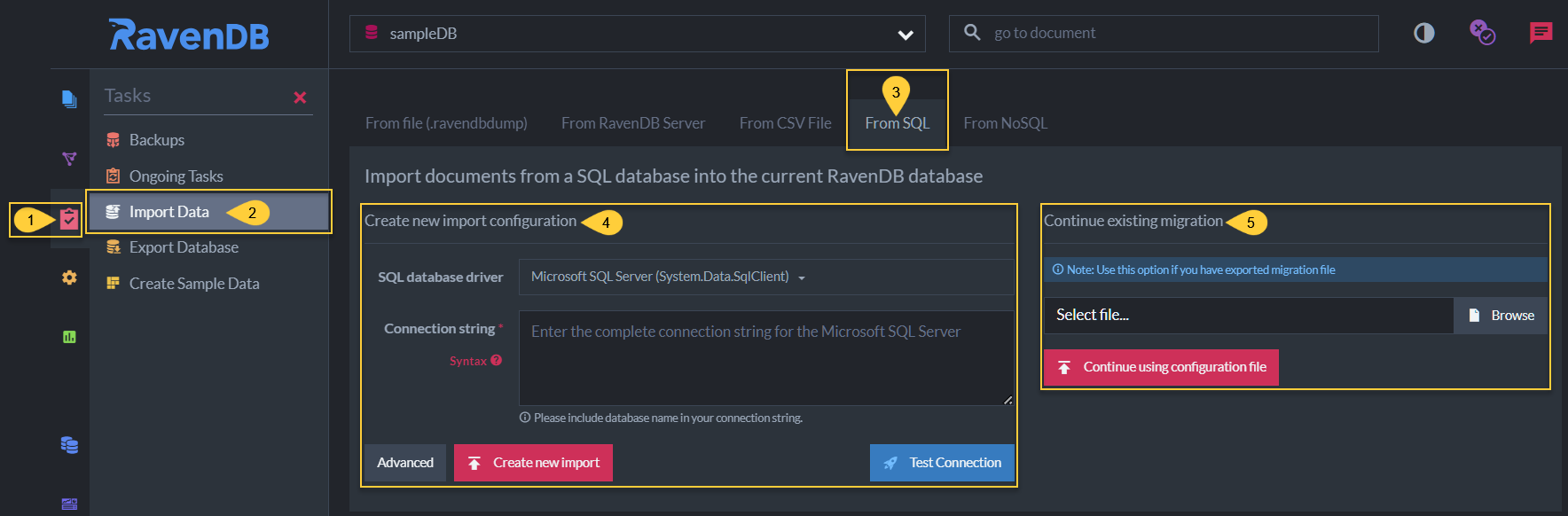

From SQL Tab

- Tasks

Click to open the Tasks menu. - Import Data

Click to import data. - From SQL

Click to import data from an SQL source. - Create new import configuration

Create a new import configuration from scratch.

The configuration can be exported and reused. - Continue existing migration

Run an existing import configuration.

Create a New Import Configuration

Use this option to enter a new import configuration.

The configuration can then be exported and reused.

Create New Import Configuration

-

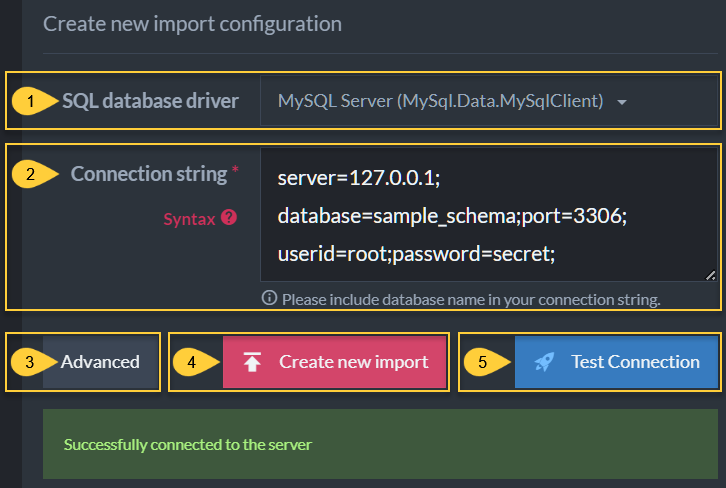

SQL database driver

Select one of the available database drivers:- Microsoft SQL Server (System.Data.SqlClient)

- MySQL Server (MySql.Data.MySqlClient)

- MySQL Server (MySqlConnector.MySqlConnectorFactory)

- PostgreSQL (Npgsql)

- Oracle Database (Oracle.ManagedDataAccess.Client)

-

Connection string

Provide a connection string to the data origin server.

E.g., the connection string for a local mySQL database can be:

server=127.0.0.1;

database=sample_schema;port=3306;

userid=root;password=secret;

Click the Syntax caption to display valid connection strings for the different SQL sources.

-

Advanced

Click for advanced import options. -

Create new import

Click to connect the SQL source and import the database schema and data. -

Test Connection

Click to validate the connection string and verify connectivity to the data source.

Successful Connection

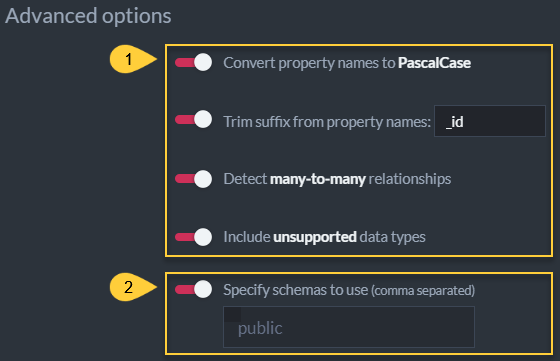

Advanced Import Options

Advanced Import Options

-

These advanced import options are available for all import types.

- Convert property names to PascalCase

Property names can be converted to PascalCase.

E.g.ZIP_CODEis converted toZipCode - Trim suffix from property names

Suffix can be removed from property name.

E.g.ADDRESS_IDbecomesAddress. - Detect many-to-many relationships

Many-to-many relationships can be detected.

If all table columns are defined as foreign keys, the relationship is identified as many-to-many.

To allow efficient modeling of 2-way relationships, Such tables are not imported and both sides of the relationship are linked. - Include unsupported data types

- Convert property names to PascalCase

-

This advanced import option is available only when importing data from a PostgresSQL server.

- Specify schemas to use (comma separated)

By default, RavenDB will attempt to import data from a PostgresSQL server using the Public schema.

To continue this behavior keep the above configuration option disabled or provide no alternative schema name in the input area.

To alter this behavior and import data using other schemas, enable the above configuration option and provide schema names (comma separated, e.g.public.info_schema,public.prod_schema) in the input area.

- Specify schemas to use (comma separated)

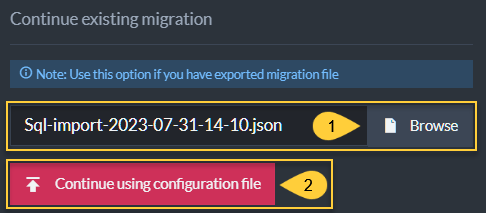

Run an Existing Import Configuration

If you have previously created and saved an import configuration, you can locate and run it here.

Use Existing Import Configuration

- Click to locate an existing import configuration on the file system.

- Click to run the selected configuration and migrate data from the SQL source.

SQL Migration

The NoSQL data model allows nested arrays/objects, where SQL uses additional

tables to render such relations, that are often unrelated to the natural data form.

The nested items satisfy third normal form (3NF),

e.g. by embedding OrderLines within an Order object to solve a potential select n+1 problem.

SQL also uses artificial tables to represent many-to-many relationships.

In NoSQL, this can be modeled as a list of foreign object IDs and requires no extra table/collection.

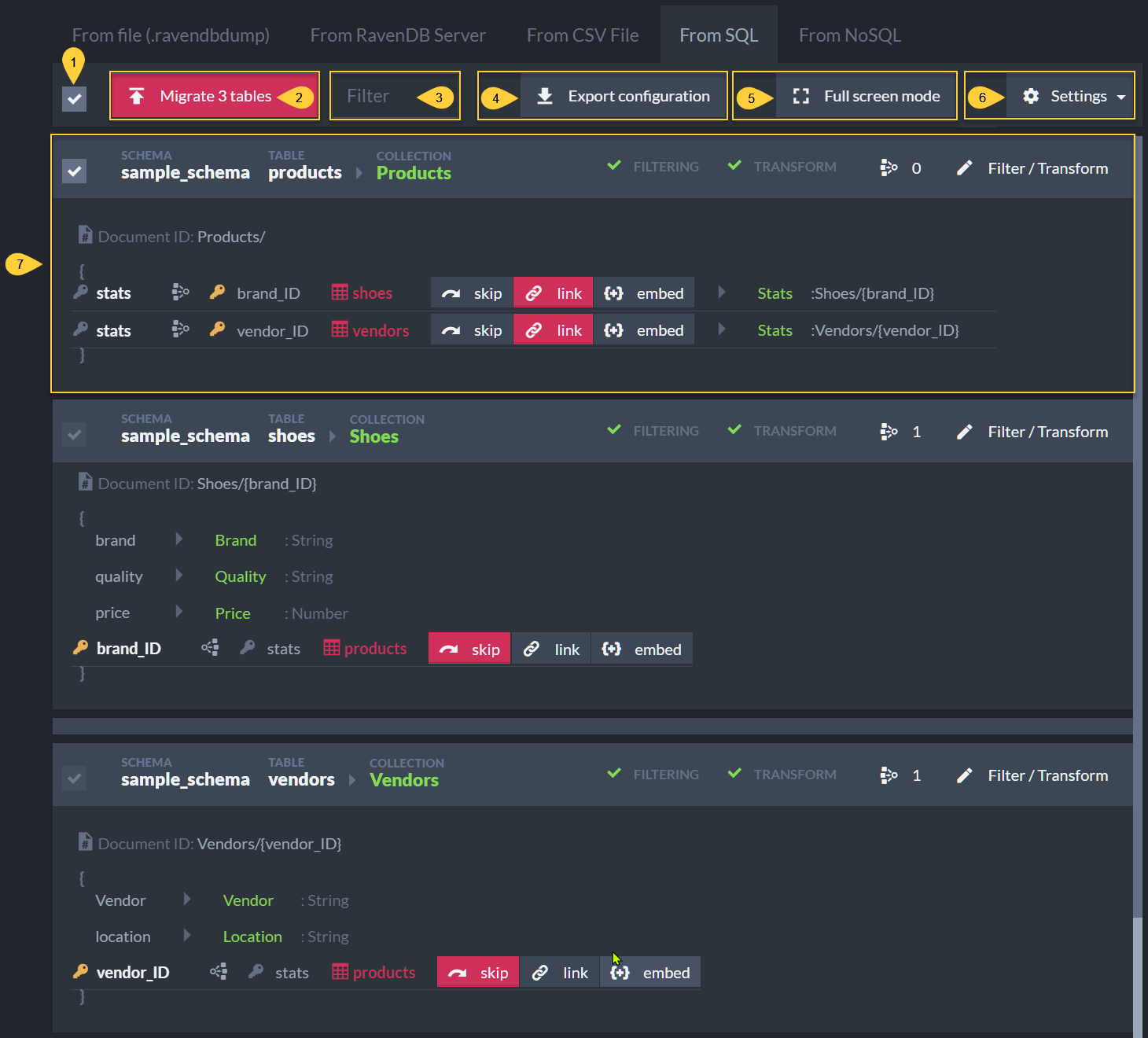

Data Conversion: General Settings

When an import configuration is executed RavenDB connects the SQL source, obtains information about database tables and keys, and displays the following view to allow the user to alter the migration and target documents structure.

Migration Tuning

-

Select Tables

Toggle ON/OFF to select the tables you want to migrate -

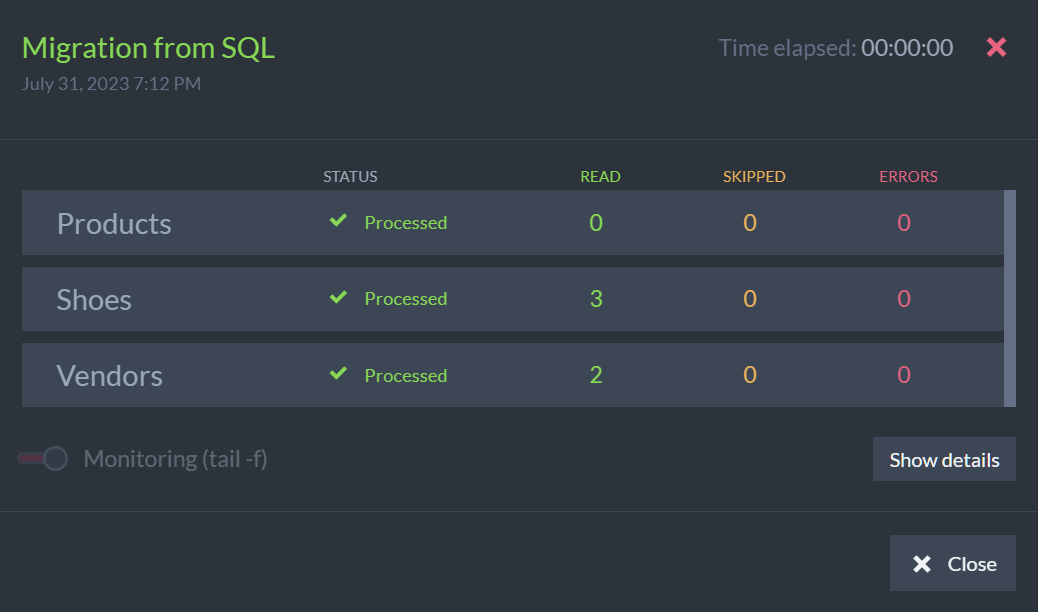

Migrate Tables

Click to migrate the selected tables and display a concise execution summary.

Execution Summary

-

Filter

Filter tables by name -

Export configuration

Click to export the configuration to a file for future use or fine tuning -

Full screen mode

Click to toggle full-screen mode -

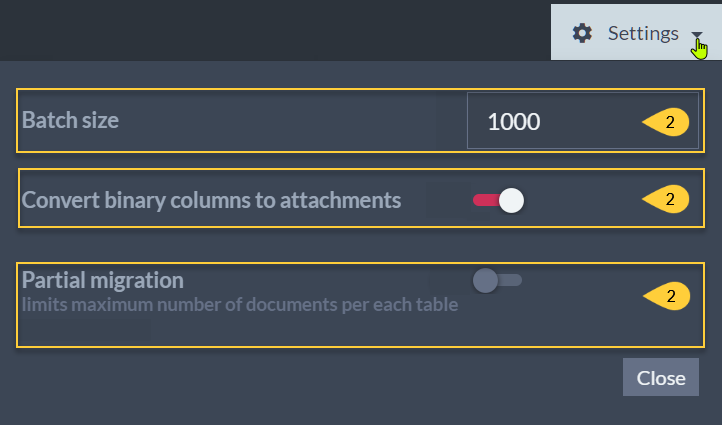

Settings

Click to set additional import configuration settings

Migration Settings

-

Batch Size

Enable to change batch size of document insertion -

Convert binary columns to attachments

Enable to add binary content to target documents as attachments.

Disable to add binary content target documentd asbase64 strings. -

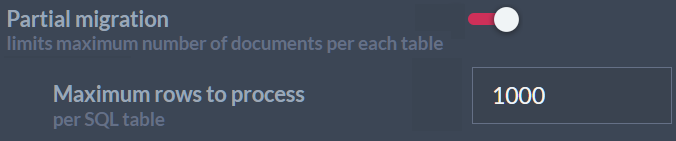

Partial migration

Enable to limit the number of items imported per table.

Partial Migration

Please note that limiting the migration may break links between documents.

-

Batch Size

-

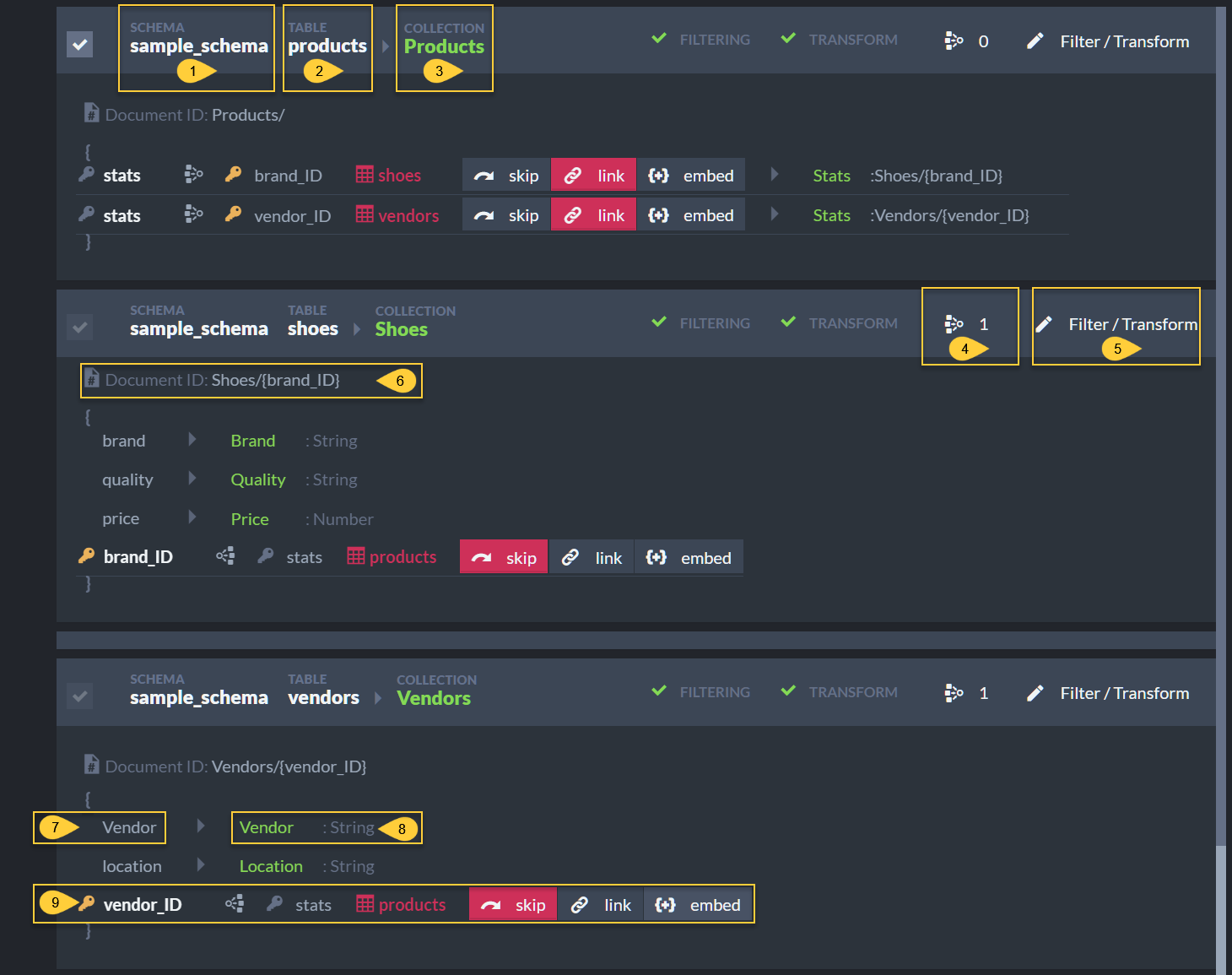

Table/Collection Conversion Tuning

Specific details related to the transfer of each table can be altered as well.

See additional details below.

Data Conversion: Table/Collection Settings

Data Conversion Tuning

-

Schema

Source schema -

Table

Source table -

Collection

Target collection

Click to alter the collection name before the conversion -

Show Incoming Links

Shows a relationships count for this table.

Click for details regarding each relationship, and to select whether to skip each relationship or embed it in the document. -

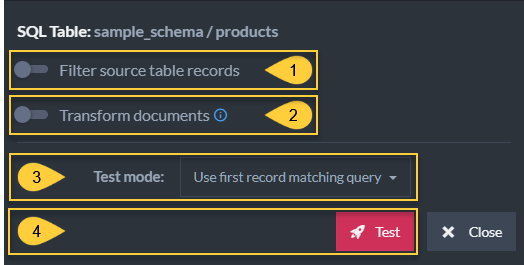

Filter/Transform

Click to alter the filter query executed over the source database, and/or the transform script used to shape the imported data. -

Document ID

The ID given to the new document, and its origin in the source database. -

SQL Column Name

The name of the SQL column the value is taken from. -

Document Property

The new document property name and type.

Click to alter the property name. -

Relationships strip

This strip summarizes relationships identified in the incoming data.

Hover above the displayed items for additional information.

Filtering and Transforming Documents

The migration process offers its users full control over the

import procedure by allowing them to comfortably filter

the data it reads from the SQL database and easily transform

the data it writes to RavenDB.

Filtering the read data is gained by altering the query RavenDB

runs over the SQL server to fetch the data.

transforming written documents is done using a transform script

through which all incoming data passes.

Learn here how to connect the source database and open the filter/transform dialog.

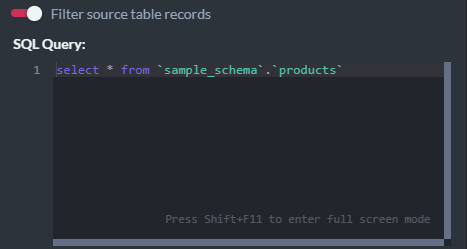

Filter and Transform

-

Enable this option to open the filter editor.

Filter Query

Data rows are read from the source SQL database by executing the query defined in the filter section.

A where SQL statement can be included in the query to filter the data as you please.

E.g. -

select * from `shop`, `orders` where year(Date) >= 2022 -

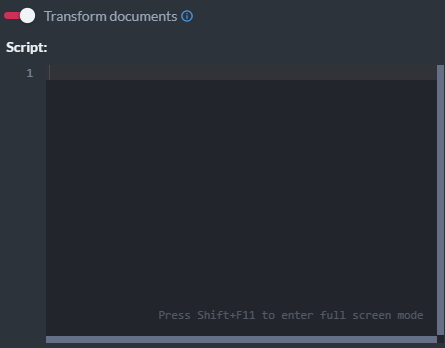

Enable this option to open the transform-script editor.

Transform Script

The added transformation script can be used to monitor all or any part of the imported data and shape the final documents as you please.

E.g. -

var linesCount = this.OrderLines.Length; if (linesCount < 3) { throw `skip`; // use this statement to skip a given document } this.LinesCount = linescount; where year(Date) >= 2022 -

select a test mode to test your filter and/or transform script before actually importing the data from the source DB to RavenDB.

Transform Script

-

Use first record matching query

This mode runs a query defined in the filter section and gets the first result. -

Specify primary key values to use

With this mode the user is presented with extra fields to provide value for each primary key column.

-

Use first record matching query

Handling Relationships

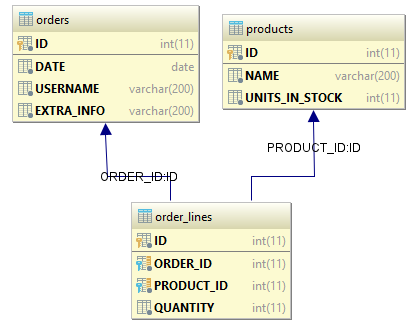

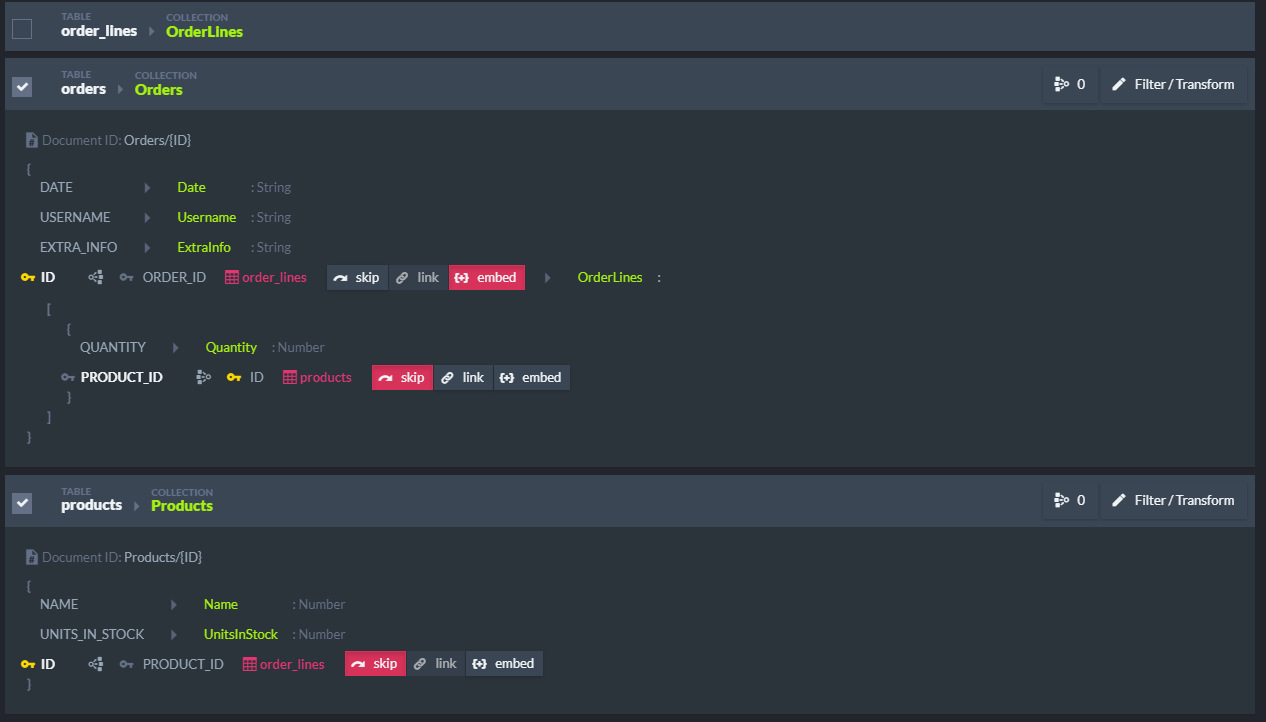

Let's use an SQL database with the following tables as an example.

Database ERD (Entity Relationship Diagram)

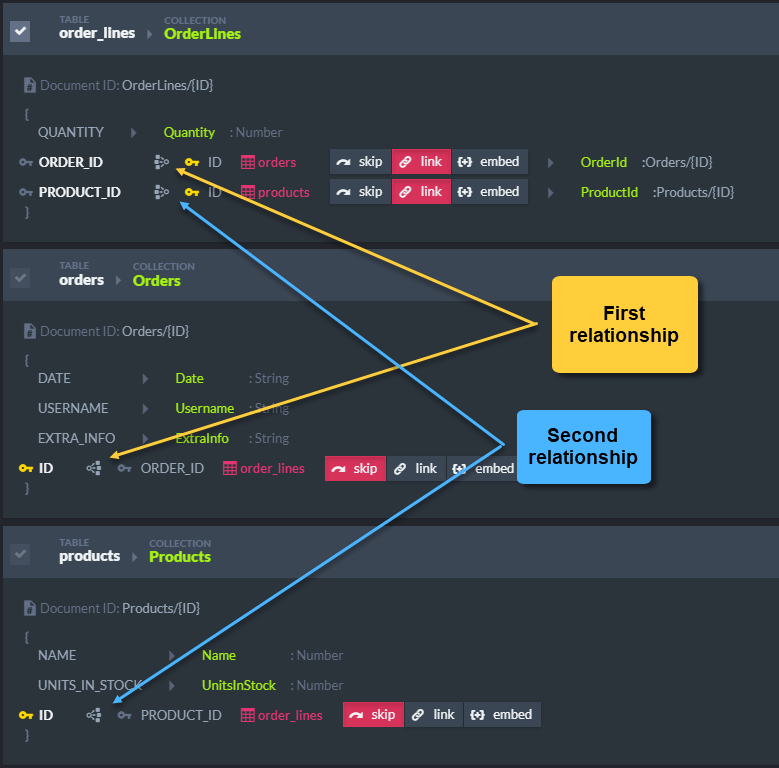

The database contains 2 relationships, which can be represented as additional document properties.

SQL Migration - Relationships

- The OrderLines document will be given an OrderId property (with the value Orders/{ID}).

This is a linking property. - On the other side of the relationship skip mode is applied, causing this document property to be skipped.

These settings will present us with a document with the following structure:

-

OrderLine

{ "Quantity": 10, "OrderId": "Orders/1", "ProductId": "Products/2" } -

Order

{ "Date": "2018-05-05", "Username": "accountName", "ExtraInfo": "Please call before delivery" } -

Product

{ "Name": "Phone", "UnitsInStock": 4 }

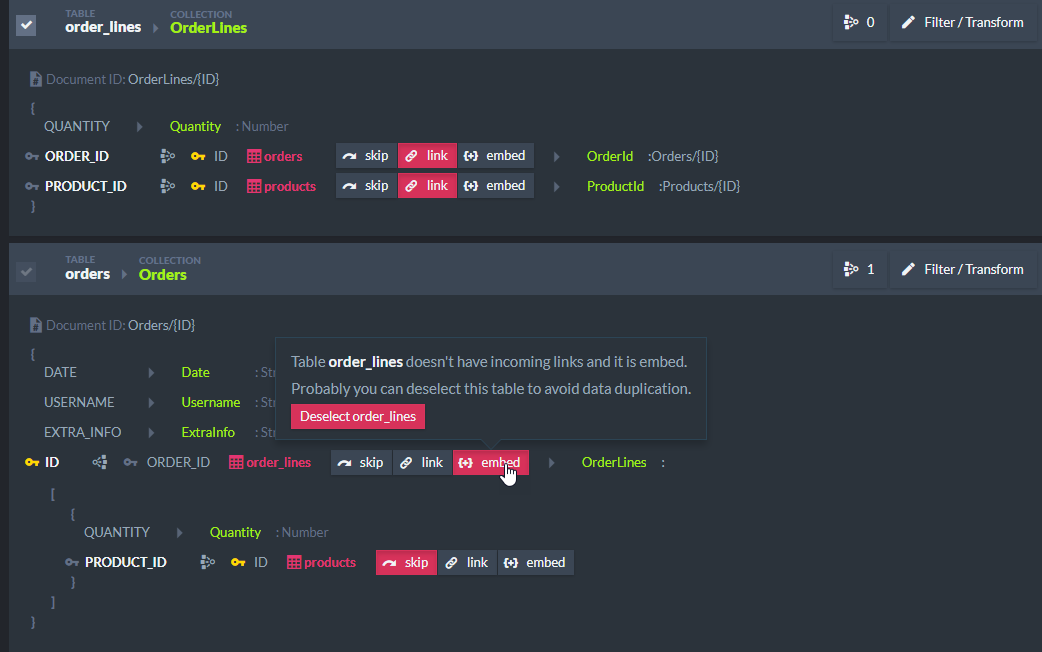

If we embed OrderLines in Orders and uncheck the OrderLines table (as we don't need this artificial collection), we end up with the following structure:

SQL Migration - Relationships

-

Order

{ "Date": "2018-05-05", "Username": "accountName", "ExtraInfo": "Please call before delivery", "OrderLines: [ { "Quantity": 10, "ProductId": "Products/2" }] } -

Product

{ "Name": "Phone", "UnitsInStock": 4 }

The target document structure is determined by the relationship and selected mode.

-

One-to-many relationship

The source table contains a primary key

The target table contains a foreign key- Mode set to skip - Property is skipped

- Mode set to link - Property value contains an array of document identifiers

- Mode set to embed - Property value contains array of nested documents

-

Many-to-one relationship

The source table contains a foreign key

The target table contains a primary key- Mode set to skip - Property is skipped

- Mode set to link - Property value contains a single document identifier or null

- Mode set to embed - Property value contains single nested document

Embedded documents do not contain an identifier, as it is redundant in this context.

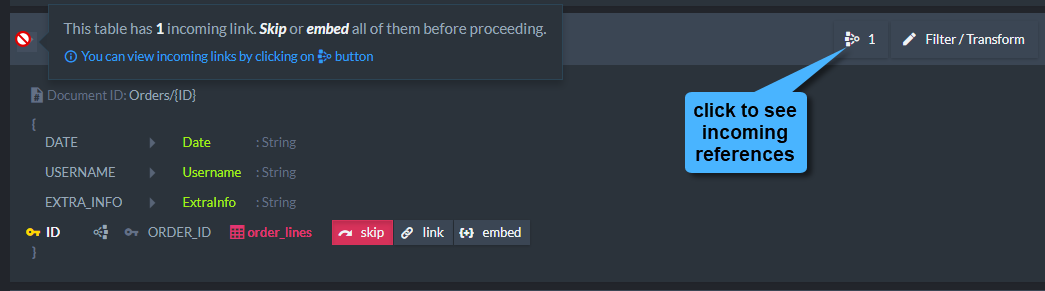

Data Integrity Helpers

The SQL migrator tries to maintain model integrity throughout the process, applying the following checks.

Table cannot be deselected when at least an incoming relationship is defined.

Table is in use by incoming references

An embedded table can be deselected.

If the table is embedded and is not used by any other relationship, the migrator

will suggest deselecting root collection.

E.g., OrderLines was embedded within Orders:

The OrderLines collection will not be needed, since it was embedded in Orders.

Suggestion to deselect root table

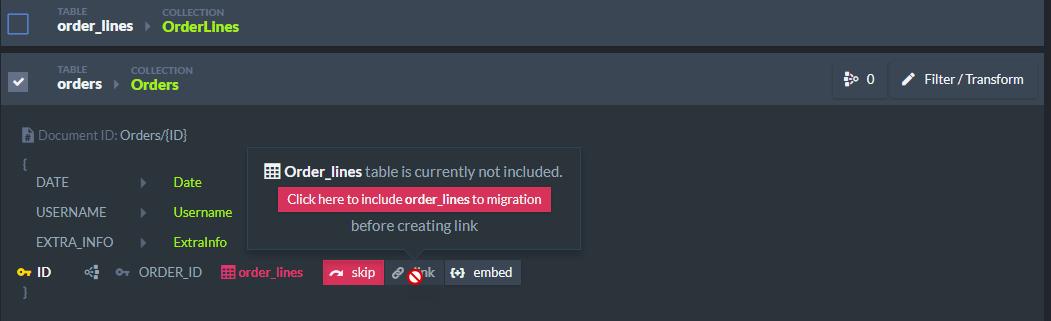

A target table for linking is not selected.

This might create a broken link, so the migrator suggests selecting a target table first.

Suggestion to select target

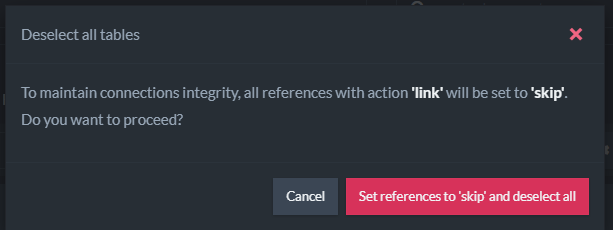

Deselecting all tables

Before deselecting all tables, the migration process asks whether the user wishes to set all relationships to skip.

Skipping all relationships